AI Factories can help lift the volume, success, value, and speed of AI innovation within organisations.

But there’s a speed hump on the horizon – the advent of agentic AI. This new AI paradigm will strain even the most mature AI factories, offering new opportunities and raising concerning risks.

We first wrote about AI factories as they began to emerge in late 2024; CommBank’s decision to set up an AI factory with Amazon Web Services was just one example of big organisations establishing AI experimentation systems. In 2025 and beyond, how will these AI factories need to evolve to keep up with agentic AI?

A recap – what is an AI factory?

In establishing AI factories for large ASX-listed entities, we have found different variants, each addressing a different need – all aimed at optimising some part, or all, of the AI lifecycle: from ideation through to experimentation and scale. They can take different forms, both technical (for instance, ML platforms or blueprints for common AI use cases) and operational (such as a delivery or operating model).

Large enterprises often establish AI factories to govern the massive amounts of innovation taking place in their organisations. They look to leverage what is being built across different parts of their organisations in a consistent way, avoiding duplication and seeking opportunities to share learning. Importantly, AI factories offer a consistent path from experimentation to production for AI.

Other organisations view AI factories as a kickstart for their AI journey – accelerating the innovation process to extract value faster. This approach tends to suit nimble organisations where a single AI roadmap is centrally managed.

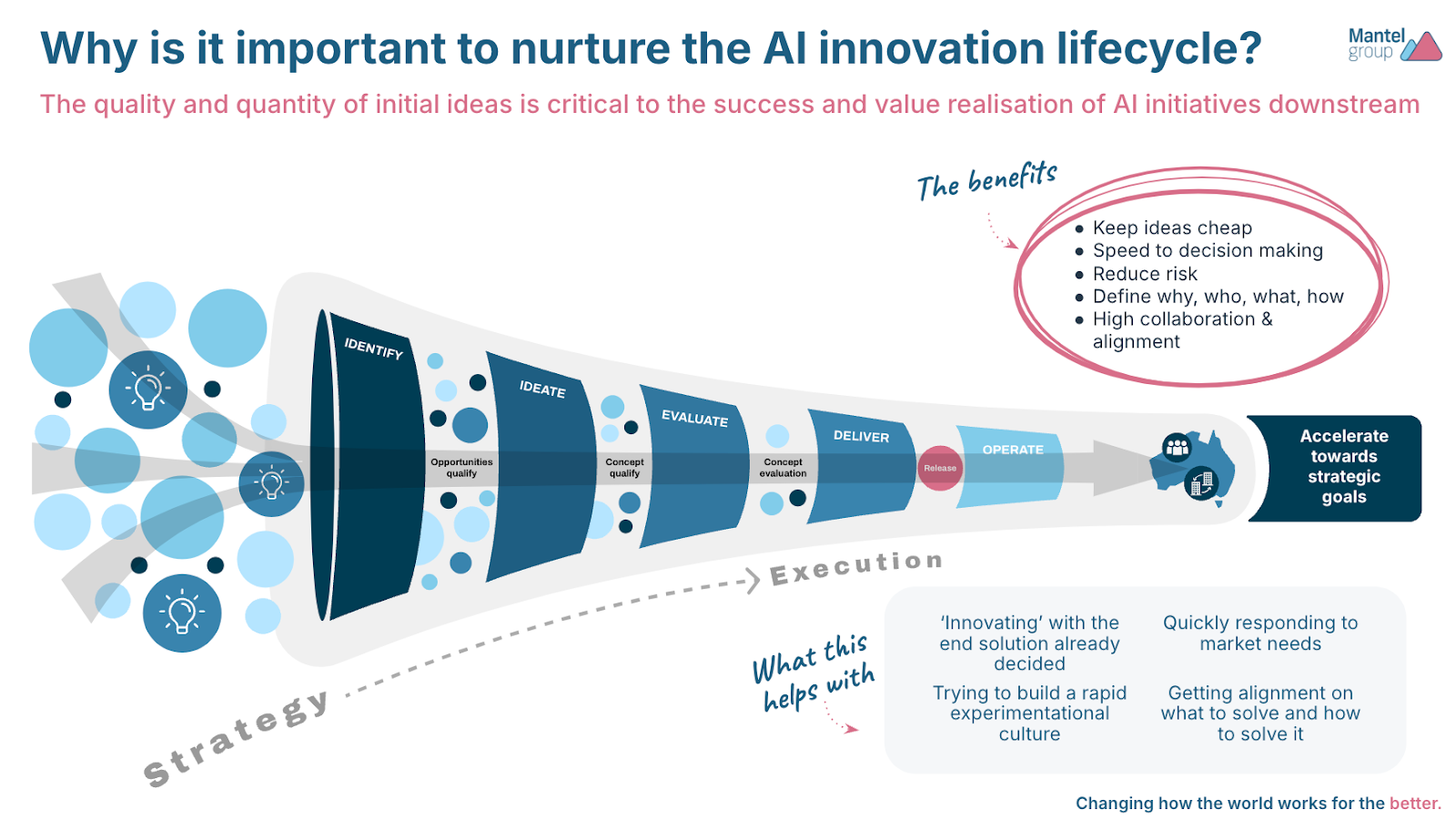

Regardless of the intent, the goal of an AI factory is to lift the volume, success, value, and speed of AI innovation within organisations.

Why do we need AI Factories?

The success and value realisation of AI initiatives correlate directly with the quality and quantity of ideas entering an organisation’s AI innovation funnel.

The value of AI will ultimately be determined by the volume of use cases entering the funnel, the number of successful experiments exiting it, their total value, and the speed at which they progress through the funnel. An AI Factory can improve all of these factors.
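
As a rough illustration, the four factors above (volume, success, value and speed) can be computed from a simple record of use cases. This is a minimal sketch under our own assumptions: the `UseCase` fields and the `funnel_metrics` function are hypothetical names, and "success" is taken to mean a use case that has a production date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class UseCase:
    """Hypothetical record of one idea in the AI innovation funnel."""
    name: str
    entered: date                  # date the idea entered the funnel
    exited: Optional[date] = None  # date it reached production, if it has
    value: float = 0.0             # estimated value once in production


def funnel_metrics(use_cases: list[UseCase]) -> dict[str, float]:
    """Summarise volume, success, value and speed for a set of use cases."""
    shipped = [u for u in use_cases if u.exited is not None]
    days = [(u.exited - u.entered).days for u in shipped]
    return {
        "volume": len(use_cases),                     # ideas entering the funnel
        "successes": len(shipped),                    # experiments exiting it
        "total_value": sum(u.value for u in shipped), # value of those successes
        "avg_days_to_production": sum(days) / len(days) if days else 0.0,
    }
```

Tracking these numbers before and after an AI factory is introduced is one simple way to see whether it is actually moving all four factors.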

In the past, AI sat firmly within central data & analytics teams. Today, AI has been democratised and touches every process in every part of the organisation. Everyone wants to do it, and everyone can do it.

What is agentic AI?

Agentic AI refers to a set of use cases that rely on Generative AI models operating in a way that is goal-oriented and autonomous.

A simple chatbot use case, where a user’s question gets converted into a query and sent to a static data source that sends information back, isn’t agentic. A chatbot that decides which data source is most relevant, answers the user’s query and performs follow-up actions in the correct systems on its own, is agentic.

For more information on agentic AI, see our recent whitepaper.

Why is it a big deal, and what does it mean for my AI factory?

Agentic AI throws a spanner in the works of even a mature AI Factory – including one that’s prepped for Gen AI use cases.

Agentic AI tooling has accelerated rapidly, and much of it is self-service and low-code or no-code. Agentspace by Google, Agentforce by Salesforce, and ServiceNow’s AI Agents platforms offer non-technical users the ability to create their own agents and connect them to useful data, systems, and other agents using out-of-the-box connectors. Many organisations will likely run one or a combination of these tools, with agents working across them seamlessly.

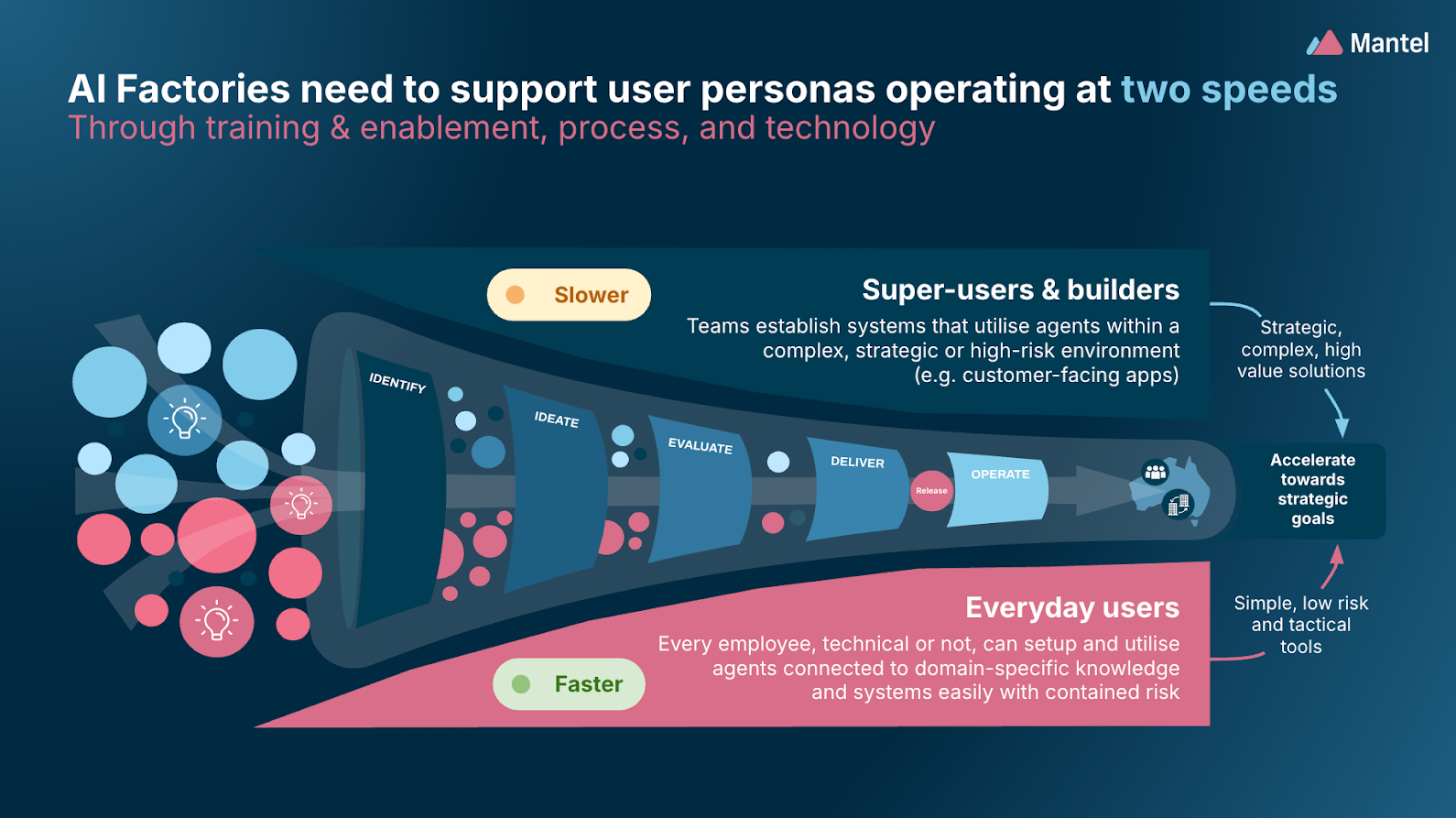

While these tools offer tremendous opportunity to organisations that are embracing the federated approach to AI innovation, their success will depend heavily on the diffusion of AI literacy and a clear path to production for every individual, technical or not. In short – new agentic tooling enables federation of AI, on steroids.

This means a few things for our AI factories:

- We need to double down on enablement and engagement – How AI Factories enable users will change, because the kinds of use cases being pursued are changing. Where previously the aim was to identify strategic, high-value use cases across the organisation and to enable the teams involved in them, AI Factories must now support ordinary users building personal agents in a way that is effective, well-governed and secure.

- Demanding value and avoiding duplication – Running agents can be expensive. Providing central insight into the usage of agents across an enterprise can help surface common use cases, data sources, and potential for consolidation. While large, strategic use cases typically have associated product and technology ownership, agentic AI is often personal and specific to a user, so we will look to our AI Factory operating model to provide de facto product ownership of agents for everyone, everywhere.

- AgentOps needs to be faster, better and more finely grained than MLOps – Agents offer several new risk vectors for security and risk teams to grapple with, and central teams need transparent tooling to identify risks fast and shut down agents remotely.

The first concern is the fact that agents will be connected to applications which they can operate on autonomously – both reading & writing data. How we allow low-risk agents to proliferate, while limiting the scope and potential impact of higher-risk agents, is a big question.

Approving agents to connect and operate autonomously at a use-case level, for traditional AI or earlier Gen AI use cases, is already a bottleneck for security teams. Providing this service for every individual interested in creating an agent will make the current process nearly impossible.

How agents are used will vary and should remain tightly governed, and acceptable usage does not necessarily correlate with technical architecture or connected data sources. For instance, a banker may have an agent connected to credit data to aid in customer support conversations – an acceptable use case. If the same AI agent is asked to produce recommendations for lending decisions, this becomes problematic. Yet on paper, and to the organisation, the two appear to be a single use case.

What are examples of things we can do to support an agent-enabled enterprise?

Identify common pain points and opportunities

Identifying where a ‘core’ set of agents is needed, either across the enterprise or within a specific team (e.g. an agent that reads my emails and drafts responses ready for the morning, or an agent specific to creating reports for my finance teams), will be key to delivering consistent value and performance for similar tasks, everywhere.

Build a central AgentOps platform that includes governance

MLOps platforms support data & AI professionals with managing models and solutions (both ‘traditional’ and Gen AI) at scale. AgentOps will be slightly different: agents will need to be managed more centrally, and the platform will need to offer a more granular view of the kinds of agentic interactions happening across an enterprise. At Mantel we believe every prompt should be auditable, and every agent should have a kill switch. Surfacing agents and their activity, whichever platform they sit on, is an essential part of proper governance, and tooling such as securiti.ai already offers many of these capabilities off-the-shelf.
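
To make "every prompt auditable, every agent with a kill switch" concrete, here is a minimal sketch of a central registry. It is an illustration under our own assumptions, not how any particular product works: `AgentRegistry`, `AuditEvent` and their methods are hypothetical names, and a real platform would persist the log and enforce the check inside each agent runtime.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    """One audited prompt (hypothetical schema)."""
    agent_id: str
    owner: str
    prompt: str
    timestamp: datetime


@dataclass
class AgentRegistry:
    """Hypothetical central registry: logs every prompt, can disable any agent."""
    owners: dict[str, str] = field(default_factory=dict)  # agent_id -> owner
    disabled: set[str] = field(default_factory=set)
    audit_log: list[AuditEvent] = field(default_factory=list)

    def register(self, agent_id: str, owner: str) -> None:
        """Record who owns an agent so its activity can be attributed."""
        self.owners[agent_id] = owner

    def kill(self, agent_id: str) -> None:
        """Kill switch: the agent is refused on every later submission."""
        self.disabled.add(agent_id)

    def submit(self, agent_id: str, prompt: str) -> bool:
        """Log the prompt, then report whether the agent may act on it."""
        if agent_id not in self.owners:
            raise KeyError(f"unregistered agent: {agent_id}")
        self.audit_log.append(
            AuditEvent(agent_id, self.owners[agent_id], prompt,
                       datetime.now(timezone.utc))
        )
        return agent_id not in self.disabled
```

Note that the prompt is logged even when the agent has been disabled, so attempts to use a killed agent remain visible to the central team.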

Enforce prompt-level security

Governing the usage of AI at the prompt level provides control that cannot be exercised by considering only the data and connectors involved. Prompt-level security refers to tooling that enforces organisational policy at the prompt level, preventing agents that are connected to legitimate data in legitimate ways from being asked to do undesirable, unethical or risky things.
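
The banker example from earlier shows why the check has to happen on the prompt rather than on the connector. Below is a deliberately naive sketch: `PromptPolicy`, `check_prompt` and the blocked phrases are all hypothetical, and simple phrase matching stands in for whatever classifier or policy engine a real tool would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptPolicy:
    """Hypothetical organisational policy expressed as blocked phrases."""
    name: str
    blocked_phrases: tuple[str, ...]


def check_prompt(prompt: str, policies: list[PromptPolicy]) -> list[str]:
    """Return the names of the policies the prompt appears to violate."""
    text = prompt.lower()
    return [
        policy.name
        for policy in policies
        if any(phrase in text for phrase in policy.blocked_phrases)
    ]


# Illustrative policy only: the same credit-data agent may support customer
# conversations, but must not be asked for lending recommendations.
LENDING_POLICY = PromptPolicy(
    name="no-automated-lending-decisions",
    blocked_phrases=("lending decision", "approve this loan", "should we lend"),
)
```

Both prompts in the banker scenario would reach the same agent over the same connector; only a check at this level can tell them apart.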

Develop an operational blueprint for agent security

Security operations at large organisations are already overloaded with requests to certify new AI tools and use cases. They aren’t all equipped with the right level of AI literacy, nor are they appropriately resourced for the incoming agentic wave, where tens or hundreds of agents could be spun up by an individual, each requiring approval. Several approaches may all play a role in security processes: federating risk and security across the enterprise, defining blueprints for agents that can be fast-tracked through approvals, or enabling security teams with AI (or agents) that can help accelerate the process (yes, this is a can of worms we’re opening).
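
One way to picture the blueprint approach is as a triage step in front of the security team. The sketch below is an assumption-laden illustration: `Blueprint`, `AgentRequest`, `triage` and the two routes are hypothetical names, and the rule (stay within the blueprint's connectors and write permissions to be fast-tracked) is just one possible policy.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    FAST_TRACK = "fast_track"            # pre-approved pattern, no manual review
    SECURITY_REVIEW = "security_review"  # falls back to the security team


@dataclass(frozen=True)
class Blueprint:
    """Hypothetical pre-approved agent pattern."""
    name: str
    allowed_connectors: frozenset[str]
    allows_writes: bool


@dataclass(frozen=True)
class AgentRequest:
    """Hypothetical request from a user who wants to create an agent."""
    blueprint: str
    connectors: frozenset[str]
    writes_data: bool


def triage(request: AgentRequest, blueprints: dict[str, Blueprint]) -> Route:
    """Fast-track only requests that stay inside an approved blueprint."""
    blueprint = blueprints.get(request.blueprint)
    if blueprint is None:
        return Route.SECURITY_REVIEW
    within_connectors = request.connectors <= blueprint.allowed_connectors
    within_writes = blueprint.allows_writes or not request.writes_data
    if within_connectors and within_writes:
        return Route.FAST_TRACK
    return Route.SECURITY_REVIEW
```

Low-risk, read-only agents built on an approved pattern can then proliferate, while anything that writes data or reaches beyond the blueprint still gets human attention.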

Questions for business leaders:

- What is the best operating model for AI and agentic AI in the organisation?

- How do we enable the rest of the organisation to safely and successfully experiment and deliver value with AI and agents?

- What tooling will enable agentic AI at scale?

- What is our strategy for managing AgentOps, as a separate challenge to MLOps?

- How do we establish the right kind of team structure and skillset to support AI innovation for both superusers and everyday users?