Mantel Group prides itself on being a learning organisation, striving to promote technology vitality and prepare our clients so they can evolve and be successful. Each year we run a series of Tech Radar workshops with a range of engineers across web, mobile, API, QA and platform domains. They provide insights and perspectives from their diverse consulting experiences and research.

If you’d like to learn more about the Mantel Group Tech Radar and how it fits into our Tech Vitality process, Sangeeta Vishwanath, one of our Principal Engineers, describes it in detail in her article, “Tech radar – Discover emerging tech trends”.

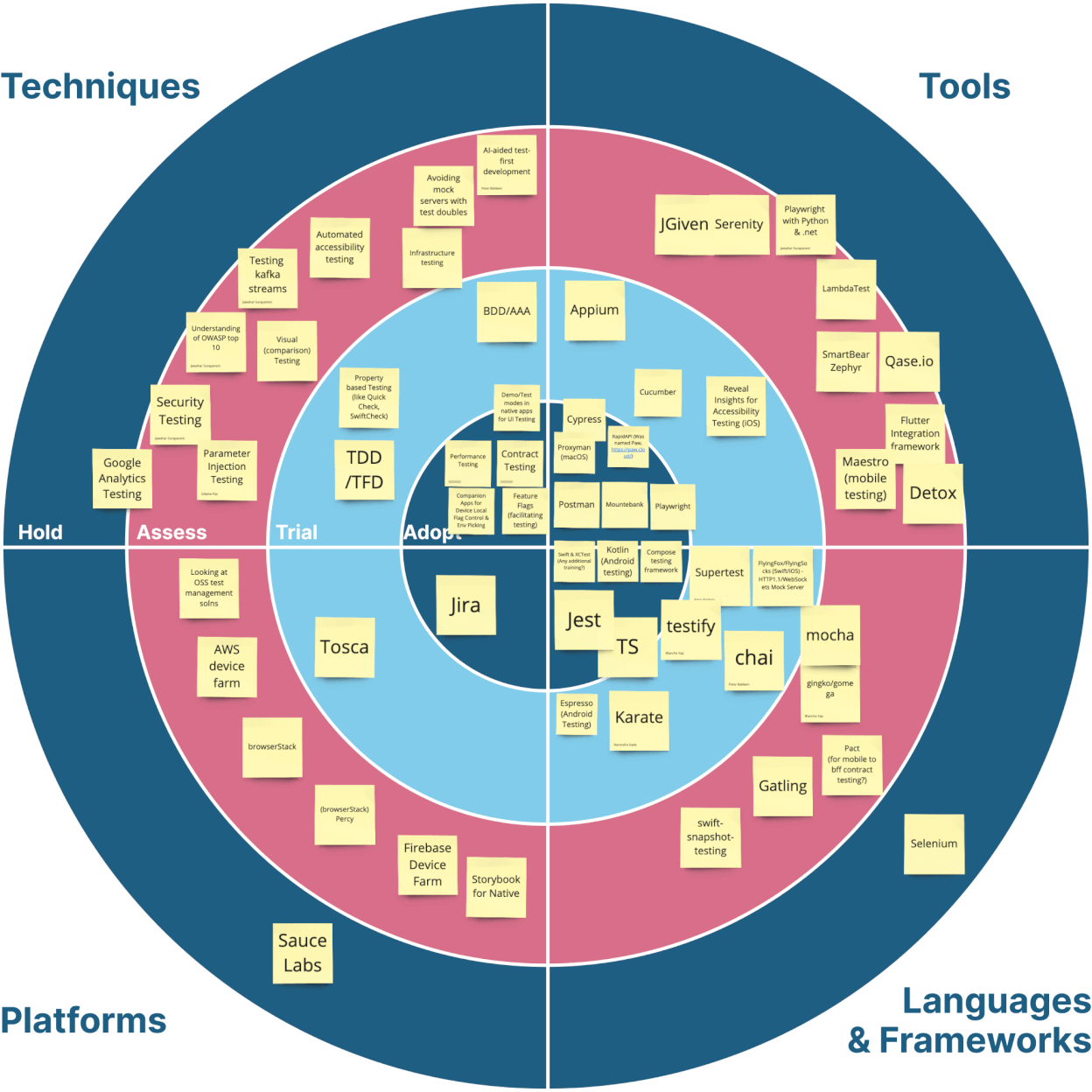

In this piece, we will review some of the key themes to come out of our most recent QA Tech Radar.

Key Themes

AI-Aided Tests and AI-Powered Tools

We’ve seen a large amount of interest this year in AI-powered tools helping developers write new or enhance existing tests. A popular topic that caught our attention is Thoughtworks’ concept of AI-aided test-first development.

This is where a tool like ChatGPT or Bard is prompted with parameters such as the tech stack, the design and architectural patterns, and the acceptance criteria. The tool then generates the tests based on these parameters. Once the tests are generated, the intended functionality is implemented.

Moreover, the testing landscape is changing rapidly, and a variety of new tools and frameworks have been released. We’d like to assess how they could aid our QA practice.

API Testing

API and Contract testing continue to generate significant interest, with a particular focus on Load & Performance testing using frameworks like Locust and Gatling. PACT and OpenAPI testing continue to be popular topics. Additionally, we have identified the testing of Kafka streams as an area for assessment.

Security

Our clients typically have their own security teams and processes in place, so establishing these practices from the get-go is a rare occurrence. Nevertheless, given the prevailing cybersecurity landscape and the constant threat of malicious actors, there is a growing interest in security-conscious practices.

We are actively exploring various tools and practices such as OWASP (Open Worldwide Application Security Project) and SAST (Static Application Security Testing) to identify inadequate security measures, minimise human error, and protect valuable data. Our goal is to assess tools that will help our clients implement more robust security practices.

Mobile Testing

We’ve seen a steady rise in interest in native mobile testing tools and techniques. Maestro, a mobile UI testing framework, garnered attention as an alternative to Appium and Detox. Additionally, automated accessibility testing on mobile devices has gained traction. We are actively evaluating different options and leveraging our web testing knowledge and experience to enhance our testing capabilities on native platforms.

Device Farms and Testing Platforms

Automated feature testing has seen a steady level of interest, with established platforms like BrowserStack, Firebase Device Farm, and SauceLabs being adopted on client sites. These platforms are a popular choice because they make it convenient to run automated feature tests from a CI/CD pipeline and give developers and testers a way to test virtually across a variety of browsers and devices.

LambdaTest has recently caught the attention of the team as a noteworthy additional option for running automated web and mobile tests.

Furthermore, we are exploring Open-source Test Management Platforms as viable options for smaller teams.

Web Frameworks

We’ve seen Playwright continue to be recommended to clients due to its great support, active development and unified API, which makes it easy to automate cross-browser tests. The most common bindings are Node and Java, but Playwright also supports Python and .NET. This year we’ll be assessing the Python and .NET bindings with the goal of producing some best practices and starter code to round out our expertise with Playwright.

Karate, an open-source testing framework, offers a rich feature set including API testing, end-to-end testing, performance testing and mocking. It’s gained traction internally, and we aim to explore its potential by leveraging internal knowledge to create training material.

Languages

TypeScript has become the go-to for web and Node-based applications in the last few years, and we’ve seen this trend continue, with TypeScript being the preferred language for test automation across our Web, Node, and React Native projects.

In addition, Python has seen a surge of interest at this year’s tech radar sessions, likely due to the majority of AI and ML tools and frameworks utilising Python. We’ve seen this trend also reflected in the QA space.

Our Tech Radar Investment Priorities

Based on the output of our tech radar sessions, we have identified the following technologies as key investment priorities.

AI-aided Tests & AI-powered tools (Assess)

It’s common nowadays to see developers feed ChatGPT or Bard some code to automatically generate their tests. However, this approach has some drawbacks. It deviates from Test-Driven Development (TDD) because the tools generate test code from the provided feature implementation. Tests derived this way tend to confirm what the code already does rather than what it should do, so existing functional issues or bugs can go undetected.

To address these drawbacks, AI-aided test-first development comes into play. In this approach, ChatGPT generates tests for our code, and then developers implement the functionality accordingly. The key difference from the previous approach is that instead of feeding the code directly, a dev or QA provides a prompt describing the tech stack and design patterns (known as a ‘fragment’) that can be reused across multiple prompts. They then describe more details of the feature along with the acceptance criteria. Based on this information, ChatGPT generates an “implementation plan” that aligns with the design patterns and architectural style of the tech stack. Lastly, it’s up to the developer to sanity-check the implementation plan, generate the tests and implement the functionality.
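To make the prompt structure more concrete, here is a minimal sketch of how a reusable fragment could be combined with a feature description and acceptance criteria. The names `Fragment` and `build_prompt`, the prompt wording and the sample feature are all hypothetical and chosen for illustration; they are not taken from Thoughtworks’ material or from any tool’s API.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    """Reusable description of the tech stack and design patterns (hypothetical)."""
    tech_stack: list[str]
    design_patterns: list[str]

    def render(self) -> str:
        stack = ", ".join(self.tech_stack)
        patterns = ", ".join(self.design_patterns)
        return f"Tech stack: {stack}\nDesign patterns: {patterns}"


def build_prompt(fragment: Fragment, feature: str, acceptance_criteria: list[str]) -> str:
    """Combine the shared fragment with feature-specific details into one prompt."""
    criteria = "\n".join(
        f"{number}. {criterion}"
        for number, criterion in enumerate(acceptance_criteria, start=1)
    )
    return (
        f"{fragment.render()}\n\n"
        f"Feature: {feature}\n\n"
        f"Acceptance criteria:\n{criteria}\n\n"
        "Propose an implementation plan that follows the patterns above. "
        "Do not write the implementation yet."
    )


# The same fragment can be reused for every feature on the project.
web_fragment = Fragment(
    tech_stack=["TypeScript", "React"],
    design_patterns=["Repository pattern"],
)
prompt = build_prompt(
    web_fragment,
    feature="Password reset",
    acceptance_criteria=["User receives an email", "Link expires after 1 hour"],
)
```

The resulting `prompt` string would then be pasted into, or sent to, whichever AI tool the team uses. Keeping the fragment separate means only the feature description and acceptance criteria change between prompts.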

This AI-aided form of Test-Driven Development is an interesting concept that we are keen to explore further.

There’s also been an influx of new AI-powered tools in the QA and testing landscape, and we’re keen to explore and experiment with them. However, it is crucial to consider the legal and security risks associated with sharing potentially sensitive information with these tools. Our goal is to create recommendations and best practices for working with them.

API Testing

Locust (Adopt)

Written in Python, Locust claims to be an easy-to-use, scriptable and scalable performance testing tool.

Locust runs each simulated user within its own greenlet (a lightweight process/coroutine). This approach allows you to write your tests in regular (blocking) Python code, without the need for callbacks or other alternatives. By writing your scenarios in Python, you can leverage your preferred IDE for development and easily version control your tests as standard code, unlike certain tools that rely on XML or binary formats. Because it can run headless, it’s flexible and easy to apply in multiple environments, including CI/CD.

It’s an interesting lightweight framework that has gained traction internally. We’ll be leveraging internal knowledge of it to create some training material.

Gatling (Assess)

Within the Java ecosystem, Gatling serves as a robust load-testing solution for applications, APIs, and microservices. It enables the generation of hundreds of thousands of requests per second while offering high-precision metrics. The tool follows a load-test-as-code approach, simplifying implementation and maintenance. While Gatling is primarily an open-source tool, it also provides an enterprise version that is useful to certain clients.

Gatling utilises its own Domain Specific Language (DSL) to ensure the readability of test scenarios for all users. The Java DSL is also compatible with Kotlin. It is conveniently provided as a standalone bundle, allowing launching from the command line, and can also be seamlessly integrated with popular build tools such as Maven or Gradle.

We decided that it’s worthwhile to run a technical assessment of how it compares to adopted tools like k6 and JMeter.

Testing Kafka Streams (Assess)

Kafka Streams is a lightweight, scalable and distributed stream processing library within the Apache Kafka ecosystem. It’s an effective tool for real-time data processing and event-driven architectures, and it integrates seamlessly with Kafka. It offers scalability, fault tolerance, stateful operations and a developer-friendly API.

For these reasons, it’s remained a popular library within Mantel Group. We’ve decided to invest in developing a solution accelerator that includes best practices and code examples for testing Kafka streams.

Security Testing (Assess)

Recent high-profile data breaches have made organisations more concerned about the risks and consequences of having their data stolen. At Mantel Group, we’re keen to understand what tools and techniques are available so that we can make recommendations to our clients and form a strong opinion on the pros and cons of each one.

Static application security testing (SAST) and Dynamic application security testing (DAST) are both methods of testing for security vulnerabilities but are used differently during the software development lifecycle.

SAST is a white box method of testing wherein the source code is examined to find software flaws and vulnerabilities such as SQL injection and others listed in the OWASP Top 10.
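As a rough illustration of the white-box idea, the sketch below uses Python’s standard `ast` module to flag `execute()` calls whose query string is built dynamically, a common source of SQL injection. It is a toy check under simplifying assumptions, not how any particular SAST product works; the function name `find_sql_injection_risks` and the sample code being scanned are hypothetical.

```python
import ast


def find_sql_injection_risks(source: str) -> list[int]:
    """Return line numbers of execute() calls whose query is built dynamically."""
    risky_lines = []
    for node in ast.walk(ast.parse(source)):
        # Only look at method calls such as cursor.execute(...).
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)):
            continue
        if node.func.attr != "execute" or not node.args:
            continue
        query = node.args[0]
        is_fstring = isinstance(query, ast.JoinedStr)
        # String concatenation (+) and %-formatting both parse as BinOp.
        is_concat_or_percent = isinstance(query, ast.BinOp)
        is_format_call = (
            isinstance(query, ast.Call)
            and isinstance(query.func, ast.Attribute)
            and query.func.attr == "format"
        )
        if is_fstring or is_concat_or_percent or is_format_call:
            risky_lines.append(node.lineno)
    return sorted(risky_lines)


# Hypothetical code under test: one unsafe query and one parameterised query.
SAMPLE = '''
def get_user(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = '" + name + "'")

def get_user_safe(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = %s", (name,))
'''

findings = find_sql_injection_risks(SAMPLE)
```

Here `findings` contains only the line of the concatenated query; the parameterised call is not flagged. Real SAST tools go much further, for example by tracking how untrusted data flows through the code, but the principle of inspecting source rather than a running application is the same.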

DAST is a black box testing method that examines an application from the outside in. It has no access to the source code; instead, it probes the running application for vulnerabilities, much as an attacker would.

Both methods are required for good security practices. However, in our consulting work DAST is often already in place, so SAST is the area that allows us to “shift left”, moving quality assurance and vulnerability scanning earlier in the development process.

We’ve identified this as an area where our teams can provide value faster (e.g. automatically scan code committed to a feature branch as part of a CI/CD pipeline). We are keen to assess what modern tools, techniques and frameworks are available that we can recommend to our clients.

Mobile Testing

Maestro (Assess)

We’ve continued to see growth in cross-platform mobile development, along with a consistent increase in the development of dedicated native apps. It’s become even more important to find automated testing solutions that have support for not only native platforms but also cross-platform builds including React Native and Flutter. In the past we’ve had success with both Detox and Appium.

Detox, a grey-box e2e and automation library, is geared mostly towards React Native builds, while Appium on the other hand evolved from Selenium and allows for truly cross-platform testing.

Appium offers greater flexibility. It’s available for iOS, Android, React Native and Flutter. However, this flexibility comes with some drawbacks. It can at times be difficult to implement due to the number of options available, and providing coverage for both iOS and Android in a single test spec can be challenging.

Enter Maestro, a mobile UI testing framework that builds on the learnings of its predecessors (Appium, Espresso, UIAutomator, XCTest) and supports native as well as cross-platform builds. It allows for easy definition of your test cases, called ‘flows’. These are conveniently written in .yaml format, which makes them simple to reason about and, thanks to their declarative nature, provides a low barrier to entry.

This, along with features like built-in tolerance to flakiness and delays, has made it particularly appealing to us, and we’re looking forward to doing a technical assessment of how it compares to Appium and Detox.

Espresso (Trial)

Espresso is a Google-backed framework available in the Java ecosystem for automated Android UI testing, which makes it easy to integrate into your existing Android apps. The core API is small, predictable and easy to learn while remaining open for customisation. As an Android-native tool, Espresso is familiar to many Android developers, making it easy for them to create feature-rich test automation with just a few lines of code. It also supports testing hybrid apps (where native UI components interact with WebView UI components) via Espresso Web.

Due to its feature richness and native Android support, we’ll be creating a solution accelerator in the form of sample code, along with best practices and recommendations for sharing across our QA practice.

Automated Accessibility Testing (Assess)

The level of accessibility we build for greatly varies depending on the industry, product type, and overall accessibility goals. Whether it’s conforming to WCAG (Web Content Accessibility Guidelines) level AAA, AA or below, certain tools are available to assess your software for any issues against your pre-defined accessibility conformance standards.

However, these tools can have certain limitations. Specific tools may not capture all accessibility issues and might produce false positives. Moreover, no single tool comprehensively addresses all accessibility aspects. Despite these limitations, there are positive aspects to consider.

Automating your accessibility tests is a good practice to adopt, and offers numerous benefits. It enhances efficiency by enabling reproducible scans or tests that can be executed manually or integrated into a CI/CD pipeline. It facilitates early detection of accessibility issues, helps promote accessibility, and most importantly, leads to enhanced user experiences for individuals with disabilities.
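To show what a reproducible automated check can look like, here is a minimal sketch that scans HTML for images without an `alt` attribute using Python’s standard `html.parser` module. It is an illustrative toy rather than a substitute for tools like Lighthouse or Axe; the class name `MissingAltChecker`, the helper `find_images_missing_alt` and the sample page are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional


class MissingAltChecker(HTMLParser):
    """Collects (line, src) for every <img> tag that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.violations: list[tuple[int, Optional[str]]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # An empty alt="" is allowed: it marks an image as decorative.
        if tag == "img" and "alt" not in attributes:
            line, _column = self.getpos()
            self.violations.append((line, attributes.get("src")))


def find_images_missing_alt(html: str) -> list[tuple[int, Optional[str]]]:
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations


# Hypothetical page fragment with one compliant and one non-compliant image.
PAGE = """<main>
  <img src="logo.png" alt="Company logo">
  <img src="chart.png">
</main>"""

violations = find_images_missing_alt(PAGE)
```

For this sample, `violations` holds a single entry pointing at `chart.png` on line 3. The sketch also illustrates the limitation noted above: it can detect that alternative text is missing, but it cannot judge whether existing alternative text is meaningful, which still needs human review.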

We have adopted several existing tools in various forms over the years, namely Google Lighthouse and Axe. This year we’ll be investing time into assessing more tools and techniques, comparing web and native options, and creating documentation based on these outcomes.

Reveal Insights for Accessibility Testing (Trial)

It can be challenging to test native apps for accessibility violations, so naturally this topic was also discussed at length during this year’s tech radar session.

Itty Bitty Apps, one of Mantel Group’s brands, brings dedicated experience in building native apps, from design and code to testing and launch. They’ve built a dedicated desktop app, Reveal, to inspect and verify your iOS app.

Reveal allows you to inspect iOS apps at runtime. A feature-rich inspector allows you to browse, filter and inspect your app’s accessibility interface elements, as well as simulate VoiceOver and Voice Control interfaces, to help find any accessibility flaws in your apps.

It’s a popular tool within the community, and we’re keen to start adopting Reveal in our native iOS projects. We’ll be working closely with the Reveal team to create training material that will help us adopt the product.

Device Farms and Testing Platforms

LambdaTest (Assess)

A relative newcomer to automated testing platforms is LambdaTest.

It is a cloud-based platform that offers both real and virtual devices capable of running Selenium, Cypress, Puppeteer, Playwright and Appium. It supports both manual and automated tests, providing a wide range of testing options for native mobile and web applications.

This year we’ve continued assessing these device farms with BrowserStack, SauceLabs and LambdaTest being actively reviewed.

For an in-depth analysis of these platforms, check out David Osburn’s blog post: LambdaTest vs BrowserStack vs SauceLabs: Testing Tools Analysis

Open-source Test Management Solutions (Assess)

A Test Management Solution, often called a TCMS (Test Case Management System), is a tool or platform that enables centralised test planning and organisation, test case execution and management, and defect management, to name a few. This is an appealing option for some of our teams and clients looking to streamline test planning, execution, and reporting. Used well, it can also lead to improved test coverage, better defect management and increased overall software quality.

Some popular options include Zephyr, Qase, and Xray. However, as these are all paid offerings, we decided to invest some time in identifying open-source alternatives. We’ll weigh up the pros and cons, and assess when they could be viable options, particularly for smaller teams with budget constraints.

Web Frameworks

Playwright with Python / .NET (Assess)

Playwright continues to see high levels of adoption and remains a popular choice for end-to-end testing web apps due to its flexible API and cross-browser and cross-platform support.

We’ve continued to invest time in writing documentation and providing some sensible defaults for our Playwright web projects using TypeScript. Having this consistent approach has been successful.

Playwright also supports bindings for .NET and Python. We’ve seen some demand for these languages across our clients, so it makes sense to also provide best practices and recommendations for these languages with Playwright.

We’ll be creating some documentation and boilerplate code for .NET and Python to offer holistic expertise with this framework.

Karate (Trial)

Another testing framework that has gained traction internally is Karate, an open-source unified test automation framework that combines API integration testing, API performance testing, API mocking and UI testing.

With the Karate framework, testers without a programming background can perform tests more easily. Karate describes itself as “Figma for testing” – enabling real-time collaboration, simple enough for anyone to get started in minutes.

Having a single framework that offers all these benefits is an interesting concept and we’re keen to explore this further. The team will be focused on leveraging internal knowledge of the framework and creating some training material to broaden the understanding across our QA practice.

What’s next?

These investment recommendations are a key input to our technology strategy. This strategy informs our recommendations to our clients and specifies the activities that we will perform for the next 6 months. These activities include:

- Identification, creation and delivery of training using a mix of online, study group and facilitated formats. Revise communities of practice around technology domains.

- Definition and execution of technology assessments as short focused projects which deliver findings and recommendations for adoption.

- Nurturing of solution accelerators by providing owners with time and support to develop ideas and test them with regular checkpoints to decide whether further investment is required and justified.

Over the course of the year we’ll apply the outputs to our solutions before repeating the process.