INDUSTRY

Health Services

SERVICES WE PROVIDED

Cloud native application development

Integration services

Identity Authentication

Data Persistence

Business Services

Containerisation

CI/CD

Key Takeaways

"The lack of true technology to support the central monitoring process had been a blocker for years. It’s truly exciting to start operating with this new model."

Senior Director, Data & Scientific Services

Company Overview

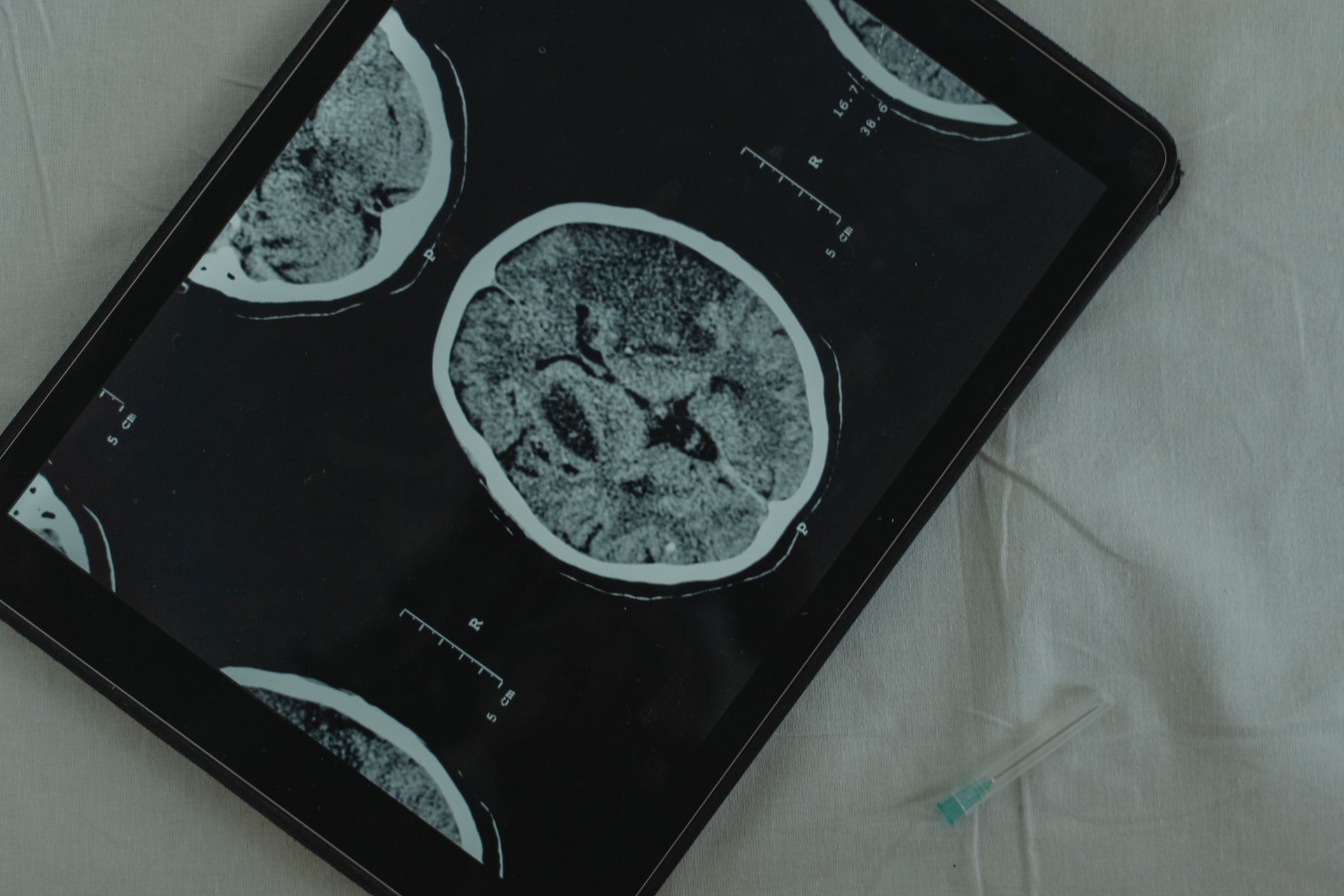

A leading neuroscience technology company optimising brain health assessments to advance the development of new medicines and to enable earlier clinical insights in healthcare. 80% of their focus is on Alzheimer’s disease and helping pharmaceutical companies find drugs that can help people suffering from such a crippling disease.

They continue to innovate their consultative services by further enhancing their technologies to provide rapid, reliable and highly sensitive computerised cognitive tests, support electronic clinical outcome assessment (eCOA) solutions, and replace costly and error-prone paper assessments with real-time data capture.

The Problem

The customer’s process exists to help better understand and improve the treatment of neurological disorders. It measures cognitive decline as part of world-leading clinical trial studies, and it depends on the people who carry out the assessments and capture the data, referred to as raters, performing consistently at the highest level. Centrally monitoring a rater’s performance was largely manual: team members had to use various disparate systems, with multiple handoff points between different users and roles. This led to inefficiencies and data integrity issues in end-to-end processing, which had the potential to compromise brain health outcomes of patients involved in trials. The customer identified the main areas that needed to be addressed:

- Simplification and data accuracy – Most of the data was previously manually transported between individuals and clinics via Excel / CSV files and other disparate systems.

- Operational inefficiencies – Siloed and manual processes had led to higher costs and waste.

The solution

Mantel Group and the customer embarked on this 9-month project by breaking the work down into three key iterative phases:

- Discovery

- MVP

- Productionisation

An initial 2-week Discovery phase covered in-depth analysis of requirements, user journey mapping workshops, development of UX wireframes, and assessment and prioritisation of features, in order to provide the customer with high-level estimates to feed into the internal business case.

During the MVP phase, the team was focused on creating a release to support a single study through an automated workflow. Mantel Group and customer SMEs worked collaboratively to iterate on requirements to ensure the right outcomes would be delivered.

The release of the MVP into production gave the business confidence that the solution could support key workflows and deliver operational efficiencies through a more streamlined process. It was also an opportunity to gather feedback and prioritise features for the subsequent phase.

The Productionisation phase was focused on supporting more studies. This phase would enable further operational efficiencies, deliver performance improvements and increase support for scalability. It also added features to provide greater visualisation of data and progress, improved intelligence around automation, increased auditing, and customisation to support new studies of strategic importance to the business.

Technical solution

- Frontend UI using Blazor WebAssembly with .NET 6 and C# 10

- Backend WebAPI using ASP.NET Core with .NET 6 and C# 10

- Clean Architecture for software implementation

- Followed a modified version of Microsoft Scalable Web Application reference architecture

- Automated code generation for service clients that are responsible for communication between frontend and backend applications using OpenAPI and NSwag

- Automated email from the application as part of workflows using SendGrid

- Implemented containerised worker services to run automated background processes based on event triggers, to process heavy data inputs and update the end application with the status and data as they are processed

- Infrastructure as Code developed using Terraform to provision Azure Resources, Network, and Azure Active Directory configurations

- Built CI/CD pipelines to build and then deploy the application, worker processes, and other resources into the development/staging/production environments

- Implementation of Authentication, Authorisation, Data Persistence, Business Services

- Implementation of complex business workflows to cover a number of scenarios
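The event-triggered worker pattern listed above can be illustrated with a minimal, language-neutral sketch. It is written in Python for brevity, whereas the project itself used C# and .NET 6, and it is not the project's code. Every name in it (`UploadEvent`, `StatusStore`, `handle_event`, `JobStatus`) is hypothetical, and the in-memory store is only a stand-in for whatever the application used to record progress.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class JobStatus(str, Enum):
    PROCESSING = "processing"
    COMPLETED = "completed"
    FAILED = "failed"


@dataclass
class UploadEvent:
    """Hypothetical event raised when a new data input arrives."""
    job_id: str
    rows: List[str]


@dataclass
class StatusStore:
    """In-memory stand-in for the application's status record (assumption)."""
    history: Dict[str, List[JobStatus]] = field(default_factory=dict)

    def update(self, job_id: str, status: JobStatus) -> None:
        # Append so the full sequence of status changes stays auditable.
        self.history.setdefault(job_id, []).append(status)


def handle_event(
    event: UploadEvent,
    store: StatusStore,
    process_row: Callable[[str], object],
) -> List[object]:
    """Process one event and report status before and after the work."""
    store.update(event.job_id, JobStatus.PROCESSING)
    try:
        results = [process_row(row) for row in event.rows]
    except Exception:
        # Any row failure marks the whole job as failed in this sketch.
        store.update(event.job_id, JobStatus.FAILED)
        return []
    store.update(event.job_id, JobStatus.COMPLETED)
    return results


if __name__ == "__main__":
    store = StatusStore()
    handle_event(UploadEvent("job-1", ["1", "2"]), store, int)
    print(store.history["job-1"])
```

In this sketch the worker records a status change before and after the heavy work, so the end application can show progress without waiting on the processing itself. A real deployment would replace the direct function call with a subscription to an event source and the in-memory store with a persistent one.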

Key products, services and techniques used

- Azure services: Key Vault, Application Insights, App Service, Storage accounts, Azure Functions, Event Grid, SQL Database, virtual network with private endpoints, 2 x containers running background services

- Frontend UI using Blazor WebAssembly with .NET 6 and C# 10

- Backend WebAPI using ASP.NET Core with .NET 6 and C# 10

- Clean Architecture for software implementation

- Modified version of Microsoft Scalable Web Application reference architecture

- User story mapping and UX wireframing

- Agile delivery through weekly sprints

- Virtual meetings / working across multiple timezones

- Infrastructure as Code developed using Terraform to provision Azure Resources, Network, and Azure Active Directory configurations

- Bitbucket (code repo), TeamCity (build and test), Octopus Deploy (deployment)

The outcomes, results and benefits

- The team that oversees the process has reduced its headcount by two and expects to reassign a further four team members once further studies/trials have been onboarded to the application

- Improved data quality as the automated process has eliminated nearly all the accidental data errors

- Faster, higher-quality data extracts with better auditing increase trust and confidence

- The ability to realise a commercial opportunity that would leverage the application

- Integration with the data lakehouse in order to identify cross-study insights

- Feedback and collaboration from the customer SMEs during the agile development process, with lots of excitement from the customer’s staff about time savings and ease of use.