Written by Dhivya Kanagasingam

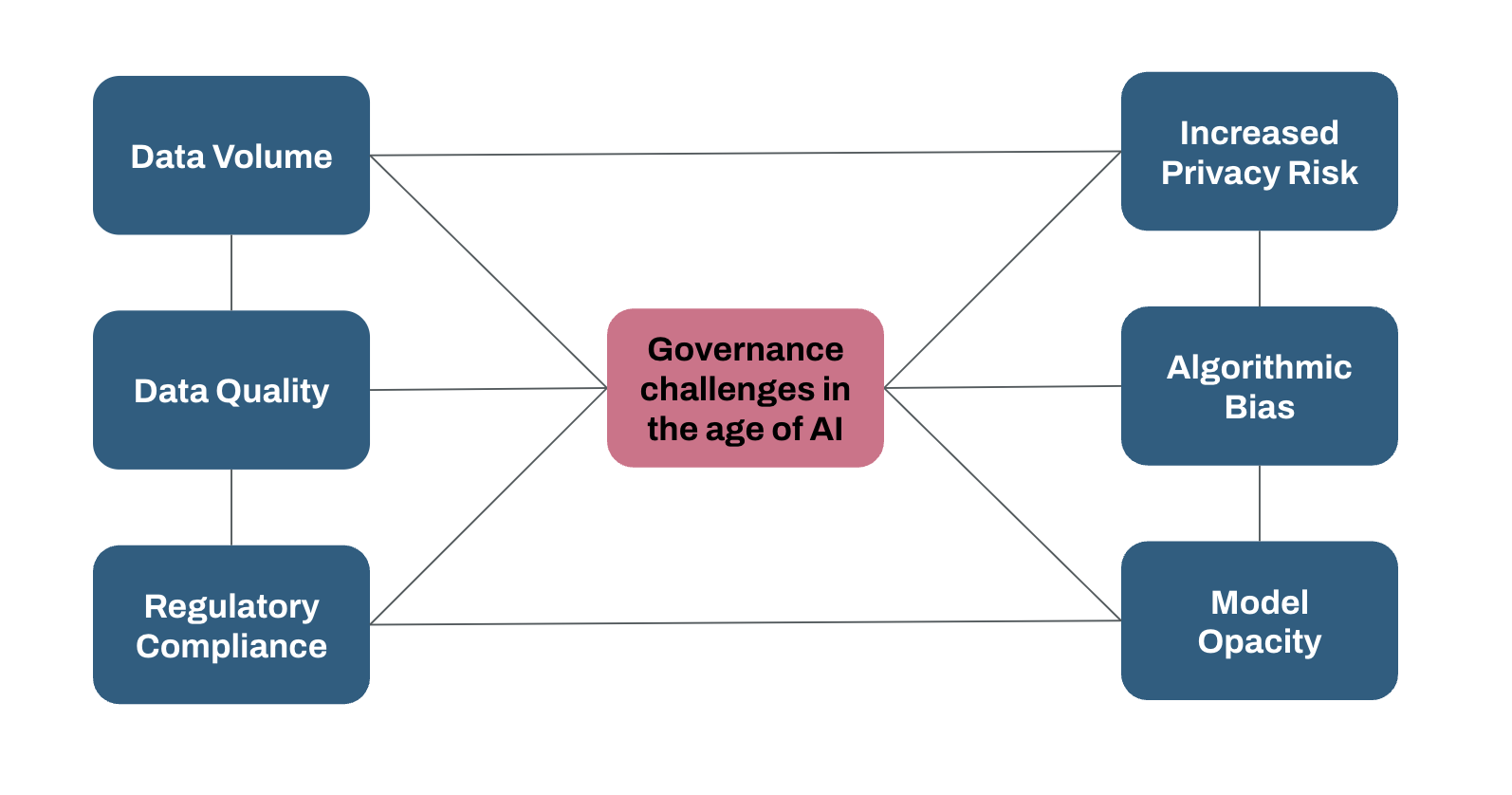

In today’s digital age, data and artificial intelligence (AI) have become the driving forces behind innovation and success for organisations across various industries. However, AI, with all its transformative potential, can’t miraculously mend a system that is flawed or poorly maintained. It also introduces novel challenges and additional layers of complexity that can make governing data difficult.

For businesses that want to harness the power of AI to make data-driven decisions, a holistic approach to data and AI governance has never been more critical.

6 Challenges and Complexities AI Introduces to Data Governance

1. Organisations need to adapt, scale and future-proof data infrastructure and management for AI

AI systems demand substantial data for training and fine-tuning, placing significant pressure on existing data storage and processing capabilities. Managing the extensive datasets required for AI can be a logistical challenge, encompassing data storage, transmission, and processing speed considerations. This requires organisations to adapt, scale and future-proof not only their data infrastructure but also their data management. Trying to solve data volume challenges simply by throwing more processing power at them, without managing the data efficiently, can quickly become unsustainable.

Governance can help ensure efficient data management by setting and enforcing standards for data quality, access, security, lifecycle management, resource allocation, and compliance.

2. Prioritising data quality when trying to leverage AI is essential

AI’s effectiveness is closely tied to data quality. Inaccurate or poor-quality data can lead to flawed models and unreliable results. Maintaining data quality isn’t a one-time task but an ongoing commitment. Data cleansing, validation, and quality control processes must be seamlessly integrated into AI governance to preserve the integrity of AI models.

3. Navigating the regulatory compliance maze

AI models frequently work with sensitive data, adding another layer of complexity: regulatory compliance. Navigating the intricate legal and regulatory landscape governing data privacy and AI is essential, as non-compliance can result in severe penalties and reputational damage. The Federal Government of Australia’s response to the Privacy Act Review Report indicates a shift towards a more rigorous privacy regime, aligning with global standards like the GDPR.

The proposed reforms, such as imposing stricter obligations for personal data collection and extending the Privacy Act’s scope, including to small businesses, demonstrate the need for organisations to stay informed and adapt. This evolving Australian regulatory environment underlines the importance of maintaining operational efficiency while ensuring compliance, so that organisations remain viable in the long term in an increasingly regulated domain.

4. Responding to increased privacy risks exacerbated by AI

The incorporation of personal or sensitive data into AI models heightens privacy risks. AI systems can inadvertently disclose sensitive information or be susceptible to data breaches, even when working with de-identified data. Striking a balance between leveraging valuable data for AI and safeguarding individuals’ privacy is a complex challenge. Robust privacy-preserving and security techniques are crucial to mitigate these risks.

5. Fostering trust through transparency

Many AI models, particularly deep learning models, operate as “black boxes” whose decision-making processes are difficult to inspect. This lack of transparency makes model outputs hard to explain, which is a serious problem in scenarios where accountability is pivotal. Techniques for enhancing model explainability must be developed and integrated into governance practices.
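To make “explainability techniques” more concrete, here is a minimal Python sketch of permutation feature importance, one widely used model-agnostic technique: shuffle one input column at a time and measure how much the model’s accuracy drops. The toy model, data and function names are hypothetical and chosen only for illustration; in practice teams would more likely use an established implementation such as scikit-learn’s `permutation_importance` than this hand-rolled version.

```python
import random


def accuracy(model, X, y):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average drop in accuracy when each feature column is shuffled in turn.

    A large drop means the model leans heavily on that feature; a drop near
    zero means the feature barely influences its predictions.
    """
    rng = random.Random(seed)  # seeded so the result is reproducible for audits
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with only column j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that only looks at feature 0."""
    return int(row[0] > 0.5)


if __name__ == "__main__":
    X = [[i % 2, (i * 7) % 3] for i in range(20)]
    y = [i % 2 for i in range(20)]
    print(permutation_importance(toy_model, X, y))
```

Here feature 1 scores zero because the toy model ignores it, which is exactly the kind of signal an explainability review looks for when checking what a model actually relies on.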

6. Confronting bias and rooting out prejudice in AI systems

AI models can unintentionally perpetuate biases present in their training data, resulting in discriminatory or unjust outcomes. Detecting and mitigating algorithmic bias necessitates a multifaceted approach involving data preprocessing, fairness assessments, and continuous monitoring. It also entails a comprehensive understanding of the societal and ethical implications of algorithmic decisions.
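As one illustration of a fairness assessment, the sketch below computes the demographic parity difference: the gap in positive-outcome rates between groups. It is a minimal Python example under stated assumptions; the function names, the 0/1 prediction format and the 0.1 tolerance are hypothetical. Demographic parity is only one of several fairness definitions, and choosing the right one is an ethical and legal judgement as much as a technical one.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive predictions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    gap = demographic_parity_difference(preds, groups)
    TOLERANCE = 0.1  # hypothetical; acceptable gaps are a policy decision
    print(f"parity gap = {gap:.2f}", "FLAG" if gap > TOLERANCE else "ok")
    # prints: parity gap = 0.50 FLAG
```

Running a check like this on every retrained model, rather than once at launch, is what turns it into the continuous monitoring described above.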

9 Ways to Master Governance in the Age of AI Through a Unified Approach to Data and AI Governance

To navigate the new and exacerbated challenges that AI introduces to data governance, a unified approach to data and AI governance is essential for enhanced efficiency and effectiveness in organisational processes. Robust AI governance relies on a strong foundation of data governance, yet data governance alone cannot address the diverse risks associated with AI; the two are interdependent.

A unified approach maximises the impact of data and AI governance, creating a comprehensive and adaptive organisational framework.

In the future, a unified data and AI governance approach might include the following strategies:

1. Creating a comprehensive data and model catalogue for holistic governance

A comprehensive catalogue that includes metadata and lineage information for both data and AI models. This catalogue serves as a central repository for all data assets and AI models, making it easier to track, access, and manage these assets and the relationships between them. It should include information on data sources, data types, model versions, training data, and model performance metrics. Metadata may also include information on the data’s sensitivity, ownership, and compliance requirements, including data collection terms.
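As a rough illustration of how a catalogue can record relationships between data assets and models, the sketch below stores entries with ownership, sensitivity and upstream lineage, and answers a question governance teams often ask: does this model depend, directly or indirectly, on personal data? The class names, fields and sensitivity labels are hypothetical; dedicated catalogue platforms offer far richer metadata models.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    name: str
    kind: str                      # "dataset" or "model"
    owner: str                     # accountable team or person
    sensitivity: str = "internal"  # e.g. "public", "internal", "personal"
    version: str = "1"
    upstream: list[str] = field(default_factory=list)       # assets this one is derived from
    metadata: dict[str, str] = field(default_factory=dict)  # e.g. source, metrics, collection terms


class Catalogue:
    """Minimal in-memory catalogue of datasets and models with lineage links."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogueEntry] = {}

    def register(self, entry: CatalogueEntry) -> None:
        # Refuse entries whose lineage points at assets the catalogue doesn't know.
        missing = [u for u in entry.upstream if u not in self._entries]
        if missing:
            raise ValueError(f"unknown upstream assets: {missing}")
        self._entries[entry.name] = entry

    def lineage(self, name: str) -> list[str]:
        """Every asset `name` depends on, directly or transitively."""
        seen: list[str] = []
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.append(current)
                stack.extend(self._entries[current].upstream)
        return seen

    def depends_on_sensitivity(self, name: str, level: str) -> bool:
        """True if any upstream asset carries the given sensitivity label."""
        return any(self._entries[a].sensitivity == level for a in self.lineage(name))


if __name__ == "__main__":
    cat = Catalogue()
    cat.register(CatalogueEntry("customers_raw", "dataset", "crm-team", sensitivity="personal"))
    cat.register(CatalogueEntry("customers_clean", "dataset", "data-eng",
                                sensitivity="personal", upstream=["customers_raw"]))
    cat.register(CatalogueEntry("churn_model", "model", "data-science", version="2",
                                upstream=["customers_clean"]))
    print(cat.lineage("churn_model"))                             # ['customers_clean', 'customers_raw']
    print(cat.depends_on_sensitivity("churn_model", "personal"))  # True
```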

2. Advancing privacy protection standards for AI

Enhanced measures for safeguarding data privacy, particularly when using sensitive or personal information in AI applications. Implement encryption, data anonymisation, access controls, and privacy-preserving techniques to protect individuals’ data and ensure compliance with data protection regulations like the Privacy Act.
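One privacy-preserving technique can be sketched with Python’s standard library alone: pseudonymising direct identifiers with a keyed hash (HMAC-SHA256), so records can still be joined without exposing the raw values. Note that this is pseudonymisation rather than anonymisation: whoever holds the key can re-link tokens to guessed identifiers, and pseudonymised data may still count as personal information under privacy law. The function names, field list and key handling below are assumptions for illustration.

```python
import hashlib
import hmac
import secrets


def pseudonymise(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token (64 hex characters).

    The same value and key always yield the same token, so pseudonymised
    tables can still be joined. Without the key, tokens cannot be recomputed
    from guessed identifiers; with it, they can, so the key must be protected.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_records(records, fields, key):
    """Return copies of the records with the listed identifier fields replaced."""
    result = []
    for record in records:
        copy = dict(record)  # leave the caller's data untouched
        for name in fields:
            if copy.get(name) is not None:
                copy[name] = pseudonymise(str(copy[name]), key)
        result.append(copy)
    return result


if __name__ == "__main__":
    # Hypothetical: in practice the key would come from a managed secret store.
    key = secrets.token_bytes(32)
    rows = [{"email": "alice@example.com", "postcode": "2000", "age": 34}]
    print(pseudonymise_records(rows, ["email"], key))
```

Fields such as postcode and age are left untouched here, and combinations of such quasi-identifiers can still re-identify people. That is why a technique like this is one layer alongside access controls and encryption, not a substitute for them.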

3. Leveraging actionable insights for effective monitoring in AI systems

Real-time monitoring and reporting tools that provide actionable insights into the performance, security, and compliance of AI models and data. These insights help organisations identify and respond to issues promptly, whether they involve model drift, data breaches, or compliance violations.
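For the model drift case, a common starting point is to compare the distribution of a model input or score in production against its training baseline. The sketch below computes the Population Stability Index (PSI) in plain Python. The equal-width binning, the 1e-6 floor for empty bins and the function name are assumptions; a frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but alert thresholds should be agreed per model.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample (e.g. training data) and a recent production sample.

    Bins are equal-width over the baseline range; values outside that range
    fall into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the logarithm stays defined.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]          # hypothetical baseline feature values
    production = [0.5 + i / 200 for i in range(100)]  # hypothetical shifted production values
    psi = population_stability_index(training, production)
    print(f"PSI = {psi:.2f}", "investigate drift" if psi > 0.25 else "stable")
```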

4. Ensuring continual data excellence through ongoing quality validation

Regular validation and quality checks for data used in AI models to ensure that it remains accurate, reliable, and fit for purpose. Automated data quality validation processes that identify and flag issues like missing data, duplicates, and anomalies.
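A minimal Python sketch of such an automated check is shown below. It flags the three issue types named above: missing required values, duplicate keys and numeric outliers. The outlier rule (Tukey’s fences at 1.5 times the interquartile range), the function name and the sample rows are assumptions for illustration; real pipelines would tune rules per dataset and typically run them on every load.

```python
import statistics


def quality_report(rows, key, required, numeric_field):
    """Flag rows with missing values, duplicate keys, or numeric outliers.

    Returns a dict mapping each issue type to the list of offending row indices.
    Outliers use Tukey's fences: below Q1 - 1.5*IQR or above Q3 + 1.5*IQR.
    """
    issues = {"missing": [], "duplicates": [], "anomalies": []}
    seen_keys = set()
    for i, row in enumerate(rows):
        if any(row.get(f) in (None, "") for f in required):
            issues["missing"].append(i)
        k = row.get(key)
        if k is not None:
            if k in seen_keys:
                issues["duplicates"].append(i)
            seen_keys.add(k)

    values = [(i, r[numeric_field]) for i, r in enumerate(rows)
              if isinstance(r.get(numeric_field), (int, float))]
    if len(values) >= 4:  # with too few points, quartiles say very little
        q1, _, q3 = statistics.quantiles([v for _, v in values], n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        issues["anomalies"] = [i for i, v in values if v < low or v > high]
    return issues


if __name__ == "__main__":
    rows = [
        {"id": 1, "amount": 10.0},
        {"id": 2, "amount": 12.0},
        {"id": 2, "amount": 11.0},   # duplicate id
        {"id": 3, "amount": None},   # missing amount
        {"id": 4, "amount": 9.0},
        {"id": 5, "amount": 11.5},
        {"id": 6, "amount": 500.0},  # suspicious value
    ]
    print(quality_report(rows, key="id", required=["id", "amount"], numeric_field="amount"))
    # prints: {'missing': [3], 'duplicates': [2], 'anomalies': [6]}
```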

5. Proactively managing risks associated with AI models for safer and more responsible outcomes

A structured approach to identifying, assessing, and managing risks associated with AI models. It involves evaluating the potential impact of model errors, biases, or ethical concerns and developing mitigation strategies, carrying out proper due diligence before models are put into production, and tracking model performance and retraining models as needed to manage risks effectively.

6. Utilising enabling tools to drive excellence in data and AI governance

Tools and technologies that support data and AI governance efforts, such as data lineage tracking software, AI explainability tools, model version control systems, and data governance platforms. These tools enable organisations to implement governance practices more effectively.

7. Conducting regular audits to ensure AI systems are meeting responsible AI standards

Regular audits of AI models to assess their compliance with ethical guidelines, fairness, accuracy, and legal requirements. Audits help identify and rectify issues in model performance and data usage that may not be evident through automated monitoring alone.

8. Integrating human oversight to enhance accountability

Defined processes for human intervention and decision-making when AI models encounter complex or ambiguous situations. Clear lines of accountability to ensure that individuals are responsible for model outputs and can take corrective action when necessary.
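A defined intervention process can be as simple as a confidence threshold that routes ambiguous cases to a named reviewer, plus an audit log of every human override. The Python sketch below shows that pattern under stated assumptions: the 0.8 threshold, the reviewer name and the decision format are all hypothetical, and a real system would persist the queue and log rather than keep them in memory.

```python
from dataclasses import dataclass, field


@dataclass
class OversightPolicy:
    """Route low-confidence model outputs to a named reviewer and log overrides."""
    threshold: float = 0.8         # hypothetical: below this confidence, a human must decide
    reviewer: str = "review-team"  # hypothetical accountable owner
    queue: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def decide(self, case_id, outcome, confidence):
        if confidence >= self.threshold:
            return {"case_id": case_id, "outcome": outcome, "decided_by": "model"}
        # Ambiguous case: keep the model's suggestion but queue it for a human.
        self.queue.append(case_id)
        return {"case_id": case_id, "outcome": "pending_review",
                "suggested": outcome, "decided_by": self.reviewer}

    def override(self, decision, new_outcome, reviewer):
        """A named person corrects a decision; the change is recorded for accountability."""
        self.audit_log.append((decision["case_id"], decision["outcome"], new_outcome, reviewer))
        return {**decision, "outcome": new_outcome, "decided_by": reviewer}


if __name__ == "__main__":
    policy = OversightPolicy()
    print(policy.decide("case-1", "approve", 0.95))
    print(policy.decide("case-2", "decline", 0.55))
    print(policy.queue)
```

Recording who changed what, and from which outcome, is what gives the “clear lines of accountability” something concrete to audit later.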

9. Harnessing the power of cross-functional collaboration for effective governance

Collaboration between different teams and departments within an organisation to ensure a holistic approach to data and AI governance. These teams should include data scientists, data engineers, legal, compliance and responsible AI experts, and business stakeholders who work together to address governance challenges, weighing business outcomes and addressing the technical, regulatory and ethical risks that may arise.

As we chart the course ahead for the governance of data and AI, a unified approach might become not just a strategic choice but a necessity for organisations aspiring to harness the full potential of AI. Successfully scaling AI rests not only on understanding the intricacies of data and AI governance but also on actively shaping a robust and adaptive framework to navigate the evolving landscape.

Want to learn more?

Should you require assistance in navigating the complexities of AI Governance or its integration with your established Data Governance frameworks, please feel free to reach out to us.