Intro: Microservices, orchestration, an assessment

Microservices have become a popular architectural paradigm for building large, complex systems. Their appeal comes from the idea that dividing functionality into smaller services makes it easier to distribute work amongst engineers. Thus, changes to the system can be made more quickly and organisations can get their products to market faster.

But there are downsides compared to a monolith. Monitoring, central retention of data, and a single point of control for flows are all trickier to perform when functionality is distributed across multiple services.

In an ideal world, we would love to have the best of both.

When implementing complex business processes in a microservices architecture, we can choose between two distinct paths. We can let the services themselves call their successors in sequential order, potentially using queues or intermediary tools to allow for load balancing and retries. Or, we can have a central controller orchestrating the entire flow.

In recent years, these two distinct approaches have become known as Choreography and Orchestration^. Is it better to design our microservices like a choreographed group dance where each dancer knows their part, for whom to wait, and with whom to swap places? Or, will it be neater to design our microservices like an orchestra, where all instruments stop, wait and start to the conductor’s movement of the baton? In this blog series we are going to explore the latter.

In our end of year Mantel Group Tech Vitality Review for 2022, we identified orchestration as an area of focus. This resulted in an assessment piece around validating the benefits of orchestration so that we can provide our clients with recommendations and insights around tooling. In addition, we chose a couple of promising tools to compare and built a basic Proof of Concept to test them individually, with real code.

In this first part of our blog series, we will give you an overview of when and why you should consider orchestrating your microservices, and if so, what criteria you can use to evaluate the required tech.

^To better understand Choreography vs Orchestration, skim through a couple of Google’s blog posts on the topic. If you need more, there are a few other suggestions to look at from dev.pro, orkes and accionlabs.

Deep dive: Orchestrating microservices, when and why

We are already familiar with microservices, which means that we are also already accustomed to the pain points that come with them. Trialling a new paradigm means getting used to new variables, so adding orchestration should only be considered when the benefits outweigh the challenges.

Some use cases may not be ideal for orchestration. Orchestration does bring in new complexity. Therefore, scenarios where we don’t have a lot of business logic, or where we just have a short and simple sequence, may not be worth the effort of adding anything new to the mix.

In addition, use cases where failed services cannot be fixed and resumed, and the entire sequence must be restarted instead, would probably gain less from an orchestrated architecture. This is because we wouldn’t care about where we failed, only that we failed, and therefore we wouldn’t need a bird’s-eye view of that sequence as long as we have a mechanism to restart it.

To determine which use cases would be suitable for orchestration, we can think back to what it offers us: a way to see the bigger picture from a central point and oversee the entire operation. Long and/or complex sequences of tasks, potentially with decisions, forks, joins or near-identical sub flows, would be significantly simplified if orchestration replaced most of the connective services. Each microservice would then only need to care about what it has to perform, and any logic associated with the overall flow would be removed from its scope.

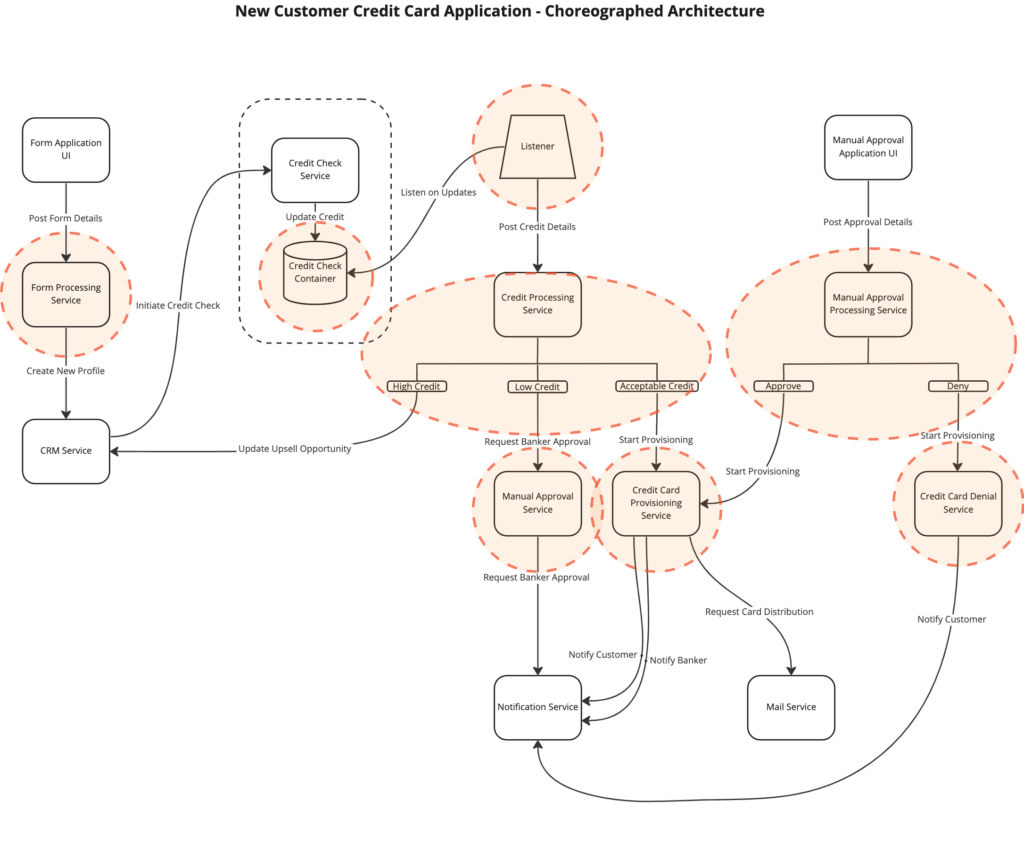

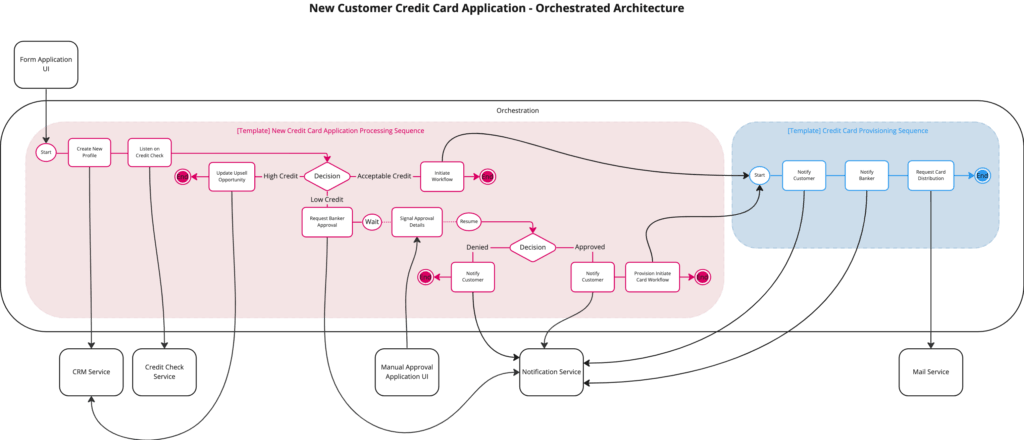

In the diagrams below, we are showing a rather simple hypothetical use case of a credit card application for a new customer in a potential choreographed architecture versus a potential orchestrated architecture. When reviewing the diagrams, take note of the elements that were replaced by the orchestration (marked in orange dotted circles).

New Customer Credit Card Application Hypothetical Use Case

Let’s deep dive into this scenario. A new application is made via an online form, which kicks off our workflow. We start off with a new CRM (Customer Relationship Management) profile creation, followed by a credit check (which may take a long time to process). Once the credit borrowing capacity has been determined, we then continue with three different potential flows. If the credit score was “acceptable”, being in the correct borrowing bracket, we want to provision the card by sending notifications to both the banker and the customer, and mailing the card to the customer.

Otherwise, if the credit score was “low”, that lengthens the process by requiring a manual approval from the banker that is conducted by notifying the banker and having the banker approve it via another online form. Once approved, the same provisioning process kicks off. If the banker disapproves, the customer is notified.

The last scenario is for unique cases when the customer’s borrowing capacity is actually higher than what they requested. In that case, in this hypothetical business process, we only mark the customer for an upsell opportunity via the CRM and let the CRM take it from there.
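The three pathways described above can be sketched as a single orchestrating function. This is only an illustration of the idea, not the output of any particular tool: the step names, the `Score` enum and the `banker_approves` callback are all hypothetical stand-ins for calls to real microservices.

```python
from enum import Enum
from typing import Callable, List


class Score(Enum):
    """Hypothetical outcomes of the credit check service."""
    LOW = "low"
    ACCEPTABLE = "acceptable"
    HIGH = "high"


def provision_card(steps: List[str]) -> None:
    """Shared sub flow: runs the same steps for both entry points."""
    steps.extend(["notify_banker", "notify_customer", "mail_card"])


def run_application(score: Score, banker_approves: Callable[[], bool]) -> List[str]:
    """Return the ordered list of steps the orchestrator would trigger."""
    steps = ["create_crm_profile", "credit_check"]
    if score is Score.ACCEPTABLE:
        provision_card(steps)
    elif score is Score.LOW:
        # Manual approval: the flow pauses until the banker decides.
        steps.append("request_manual_approval")
        if banker_approves():
            provision_card(steps)
        else:
            steps.append("notify_customer_declined")
    else:
        # Borrowing capacity is higher than requested: hand over to the CRM.
        steps.append("mark_upsell_in_crm")
    return steps
```

Note that none of the individual steps needs to know which step comes next; the sequencing lives entirely in `run_application`.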

In the choreographed variation, we could immediately point out a few disadvantages.

An obvious one is the fact that understanding what the actual process is, at a glance, is not that straightforward; and monitoring this complex sequence thus becomes slightly more challenging.

This architecture can still work very well as it is, but it would require a solid foundation of logging in each service and alerting when steps in the sequence fail. However, its biggest disadvantage would surface with any need for retries due to service limits under large volumes of requests. If we add queues or listeners to this diagram, it will only get harder to grasp and we will have a lot of infrastructure to maintain.

On the flip side, the orchestrated variation gives us a very handy eagle-eye view of the entire business logic. We can also quite quickly identify any repeated sub flows, because they are captured in their own templated sequence of tasks. Another visual difference is that the microservices’ arrows go back and forth to and from the orchestration, rather than to each other. The microservices can therefore concentrate on themselves and not worry about any other service’s position in the sequence; that logic moves directly into the orchestration.

The disadvantages of the choreographed architecture become the strengths of the orchestrated architecture, which offers a centralised ability to monitor, debug, retry and process tasks, and decouples this infrastructure from the microservices. In a case like this, introducing orchestration may very well be worth the overhead.

Deep dive: Evaluating an orchestration tool

In theory, orchestration seems to solve a fair few of the issues that come with a microservice architecture by incorporating some of the benefits of the monolith. But, as we discussed earlier, theory doesn’t always work in practice. At the end of the day, the effectiveness of the orchestration solution will depend on how well the chosen orchestration tool meets our expectations. Below, we have compiled a set of criteria that we expect an orchestration tool to meet.

Various Task Handling

First and foremost, the orchestration tool must be able to coordinate with services using a variety of integration patterns:

- Simple request/response = the tool in its most basic form needs to know how to hit an API, process the response and use the result in the next task. For instance, using a profile ID that is returned from a POST request to a CRM service in the next step of requesting a credit check for that ID. This would include support for different types of headers and authentication.

- Long polling = the tool should be able to long poll a service, such as a credit check service, as that would remove a significant level of complexity that would come from having to spin up such infrastructure ourselves.

- Async pub/sub = as another option of resolving long running tasks, the tool should provide a facility to listen to push updates from a microservice.

- Manual Step = the tool should have the ability to wait for a long running task for an indeterminate amount of time until said task is manually resolved. For example, a manual credit limit approval task that gets initiated by the orchestration tool and causes the sequence to halt [Note the diagram below]. The tool is expected to be able to wait indefinitely until the banker manually approves the credit limit, consequently triggering the approval service to signal the tool that the approval task has been completed.
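To make two of the patterns above more concrete, here is a minimal sketch of what a tool would otherwise save us from writing ourselves. It assumes plain repeated polling (a simplification of true long polling, where the server holds the request open) and an in-process manual step; `poll_until_done` and `ManualStep` are hypothetical names, not part of any orchestration tool.

```python
import threading
import time
from typing import Callable, Optional


def poll_until_done(
    fetch: Callable[[], Optional[str]],
    interval_s: float,
    max_attempts: int,
) -> str:
    """Call `fetch` repeatedly until it returns a result instead of None."""
    for _ in range(max_attempts):
        result = fetch()
        if result is not None:
            return result
        time.sleep(interval_s)
    raise TimeoutError("task did not complete within the allowed attempts")


class ManualStep:
    """A step that blocks the flow until someone resolves it by hand."""

    def __init__(self) -> None:
        self._resolved = threading.Event()
        self._approved: Optional[bool] = None

    def resolve(self, approved: bool) -> None:
        # Called by the (hypothetical) approval service once the banker decides.
        self._approved = approved
        self._resolved.set()

    def wait(self, timeout_s: Optional[float] = None) -> bool:
        # With timeout_s=None this waits indefinitely, as a manual step requires.
        if not self._resolved.wait(timeout_s):
            raise TimeoutError("manual step was not resolved in time")
        return bool(self._approved)
```

Even this toy version needs timeouts, thread safety and error handling, which hints at why we would rather have the tool own these concerns.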

Reusability, Decisions, Forks, Joins

An orchestration tool must be able to deal with workflows that have more complicated logic without having to constantly redefine the same parameters or sequences. The tool should support reusing definitions of sequences in case those sequences are repeated in close to identical scenarios. For instance, provisioning a credit card can be triggered when a low credit score has been manually approved or when the credit score was automatically deemed acceptable without a need of a banker review. Instead of having to define the same flow twice, we would prefer the tool to use the same sequence template for both entry points as a subflow.

The tool should also be smart enough to deal with multiple possibilities of flows depending on the output of previous tasks. In the case of a credit check, the tool should support a fork of three different flow pathways depending on whether the credit check was “low”, “acceptable” or “high”. In other cases, the sequence may need to fork every time it is run due to tasks that need to be done in parallel. The tool should allow those tasks to then join at the end and combine their outputs.

Although we have not included this in our credit card use case above, we may think of a scenario where the credit check consists of two microservices running at the same time and the score is then determined by combining both outputs.
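A fork and join of that kind could look roughly like the sketch below, which uses Python's `asyncio.gather` to run two checks concurrently. The two scoring services, their return values and the averaging rule are invented purely for illustration.

```python
import asyncio


async def bureau_score(profile_id: str) -> int:
    """Hypothetical external credit bureau lookup."""
    await asyncio.sleep(0.01)  # stands in for network latency
    return 700


async def internal_score(profile_id: str) -> int:
    """Hypothetical in-house scoring service."""
    await asyncio.sleep(0.01)
    return 600


async def combined_credit_check(profile_id: str) -> float:
    # Fork: start both checks at the same time.
    # Join: gather() resumes only when both have returned.
    scores = await asyncio.gather(
        bureau_score(profile_id),
        internal_score(profile_id),
    )
    # Combine the outputs; a simple average is assumed here.
    return sum(scores) / len(scores)
```

Running `asyncio.run(combined_credit_check("p-1"))` takes roughly as long as the slower of the two checks, rather than the sum of both.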

Fault Tolerance, Repairability, Monitoring

Needless to say, bringing all the business logic into one tool to rule them all comes with great responsibility. Since the tool is meant to centralise as much of the workflow-related logic as possible, we expect the tool to handle all retries, delays, backoffs and debounces. These should be configurable for each task. When errors occur within a task, the tool must retry as per the config, or allow halting the flow until the associated microservice is fixed, to then resume execution.

In addition, the tool must provide visibility over all running tasks, whether they are in a healthy working state or have failed. That includes providing monitoring that shows the current state of each sequence, the input and output of each task in the sequence, and the number of retries or delays that were required for each task to complete.
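As a rough sketch of what a per-task retry configuration with exponential backoff amounts to, consider the following. `RetryPolicy` and `run_with_retry` are hypothetical names and defaults chosen for this example; real tools expose their own configuration formats.

```python
import time
from dataclasses import dataclass
from typing import Callable, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class RetryPolicy:
    """Per-task retry settings (illustrative defaults)."""
    max_attempts: int = 3
    initial_delay_s: float = 0.5
    backoff_factor: float = 2.0


def run_with_retry(
    task: Callable[[], T],
    policy: RetryPolicy,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying on any exception with a growing delay."""
    if policy.max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = policy.initial_delay_s
    attempt = 1
    while True:
        try:
            return task()
        except Exception:
            if attempt == policy.max_attempts:
                raise  # out of attempts: surface the error so the flow can halt
            sleep(delay)
            delay *= policy.backoff_factor
            attempt += 1
```

Passing `sleep` as a parameter is a small design choice that lets the delays be observed or skipped in tests without actually waiting.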

State Management, Versioning

The orchestration tool must remain resilient to internal changes, including internal errors within the tool’s infrastructure, or changes to the tool’s operation. If, say, the tool’s service goes down momentarily while sequences are running, it must be able to resume them once it is back up. This is possible with state management in place that continuously saves the progress of any workflows. When the tool is upgraded, the new version must ensure backwards compatibility so that currently running sequences are unaffected.
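The idea of saving progress after every step and resuming from the last checkpoint can be sketched as follows. This is an assumption-laden toy: `store` is any dict-like object standing in for a durable database, and `run_workflow` is a hypothetical helper, not how any specific tool implements state management.

```python
import json
from typing import Callable, Dict, List, MutableMapping, Tuple

# A step takes the workflow data so far and returns the updated data.
Step = Tuple[str, Callable[[Dict], Dict]]


def run_workflow(
    run_id: str,
    steps: List[Step],
    store: MutableMapping[str, str],
    initial: Dict,
) -> Dict:
    """Run steps in order, checkpointing after each one.

    If a checkpoint for `run_id` already exists, completed steps are skipped.
    """
    if run_id in store:
        saved = json.loads(store[run_id])
    else:
        saved = {"completed": [], "data": initial}

    for name, step in steps:
        if name in saved["completed"]:
            continue  # already done before the interruption
        saved["data"] = step(saved["data"])
        saved["completed"].append(name)
        store[run_id] = json.dumps(saved)  # checkpoint after every step
    return saved["data"]
```

If the process dies between two steps, calling `run_workflow` again with the same `run_id` and `store` picks up at the first step that was not checkpointed.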

Similarly, the tool should ideally support versioning of workflow definitions, so that we can change the configuration or sequence of steps for a workflow template while, hopefully, allowing currently running instances of that workflow to complete without being affected.
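One way to picture this is a registry where each running instance is pinned to the definition version it started with. The sketch below is a simplified assumption of how that could work; `WorkflowRegistry`, `WorkflowInstance` and the step names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class WorkflowInstance:
    """A running workflow, pinned to the version it was started with."""
    name: str
    version: int


class WorkflowRegistry:
    def __init__(self) -> None:
        self._definitions: Dict[Tuple[str, int], List[str]] = {}
        self._latest: Dict[str, int] = {}

    def register(self, name: str, steps: List[str]) -> int:
        """Store a new version of a definition and return its version number."""
        version = self._latest.get(name, 0) + 1
        self._definitions[(name, version)] = list(steps)
        self._latest[name] = version
        return version

    def start(self, name: str) -> WorkflowInstance:
        """New instances always start on the latest registered version."""
        return WorkflowInstance(name, self._latest[name])

    def steps_for(self, instance: WorkflowInstance) -> List[str]:
        """Look up steps by the instance's pinned version, not the latest."""
        return self._definitions[(instance.name, instance.version)]
```

An instance started before a new version is registered keeps resolving to its original steps, while instances started afterwards pick up the change.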

Maturity

Having all of the above is important to the success of the orchestration tool within a microservices architecture. But what is important to the success of developers adding that tool to their current or future implementation is documentation. Good documentation should include instructions for the above functionality, enough solutions to known bugs in community threads and, hopefully, a few sample projects that can be used for reference. In order to introduce the tool to a real project, we would prefer it to be in a stable state, with an official release and a few other companies using it.

The most popular orchestration tools currently on the scene are Temporal, Conductor, Camunda, PEGA and Cadence. These are solid choices, several of them created at large tech companies such as Netflix and Uber. They have big brands using them, like GitHub, Tesla, Slack, Datadog, Atlassian and Google, and they claim to satisfy these criteria.

Conclusion: Assessment outcome and next steps

By now, adding an orchestration tool to an existing microservices architecture and playing with it must feel enticing. From our assessment, we did conclude that orchestrating microservices is a good idea in scenarios involving a complex and potentially repetitive sequence of tasks.

However, this assessment alone cannot instil the confidence required to propose adding orchestration in a real-life project, primarily because the idea of orchestration can only ever be as good as the chosen tool. Thus, our next step is a PoC (Proof of Concept) project trialling at least two different tools using real code. For this PoC, we will be using the same New Customer Credit Card Application use case explained above (maybe slightly tweaked), and we will be testing each tool’s ability to orchestrate a sequence of mock microservices.

The next chapters in this blog series will go through the orchestration tools PoC in more detail. Stay tuned!