By Brendan Wilkinolls

The advent of Artificial Intelligence (AI) has brought about revolutionary changes in virtually every sector of society. AI offers innovative solutions and uncovers new possibilities, leading to the digital transformation of many industries. However, the promising capabilities of AI are accompanied by significant challenges, particularly in cybersecurity. With the rapid proliferation of machine learning (ML) models in daily operations, securing these workflows has become essential to protect businesses and customers from emerging threats. Today, we delve into one such threat: adversarial AI.

How Adversarial AI threatens your Machine Learning Models

In simple terms, adversarial AI refers to a set of techniques used to deceive AI systems. By exploiting vulnerabilities in the AI models, adversaries can trick these systems into making erroneous decisions that favour the attacker’s motives [1]. These techniques have become alarmingly prevalent in recent times. In 2022 alone, 30% of all AI cybersecurity incidents utilised adversarial techniques [2].
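To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. The weights, bias, input values and the `epsilon` budget are all hypothetical and chosen purely for illustration; real attacks target far larger models, but the principle of nudging each input feature slightly in the direction that most changes the output is the same.

```python
import math

# Hypothetical weights of a tiny linear classifier (illustrative only).
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def score(x):
    """Return the model's probability that input x is in the positive class."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(x, epsilon):
    """Shift every feature by at most epsilon so that the score drops.

    For a linear model the gradient of the logit with respect to the input
    is the weight vector, so stepping against sign(weight) is the
    fast-gradient-sign idea applied to this toy model.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


original = [0.3, 0.2, 0.4]
adversarial = perturb(original, epsilon=0.2)

print("original score:   ", round(score(original), 3))
print("adversarial score:", round(score(adversarial), 3))
```

No feature moves by more than 0.2, yet the score crosses the 0.5 decision threshold and the predicted class flips.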

Despite the clear and present danger, most organisations are woefully underprepared. In a sobering survey conducted by Microsoft, it was found that roughly 90% of companies had not implemented any strategies to account for adversarial AI attacks [3]. Considering the potential damage these attacks can inflict, this is particularly worrying. Every adversarial AI-related attack has the potential to be classified as a ‘Critical’ incident to an organisation’s cybersecurity [4], causing substantial damage to both reputation and revenue.

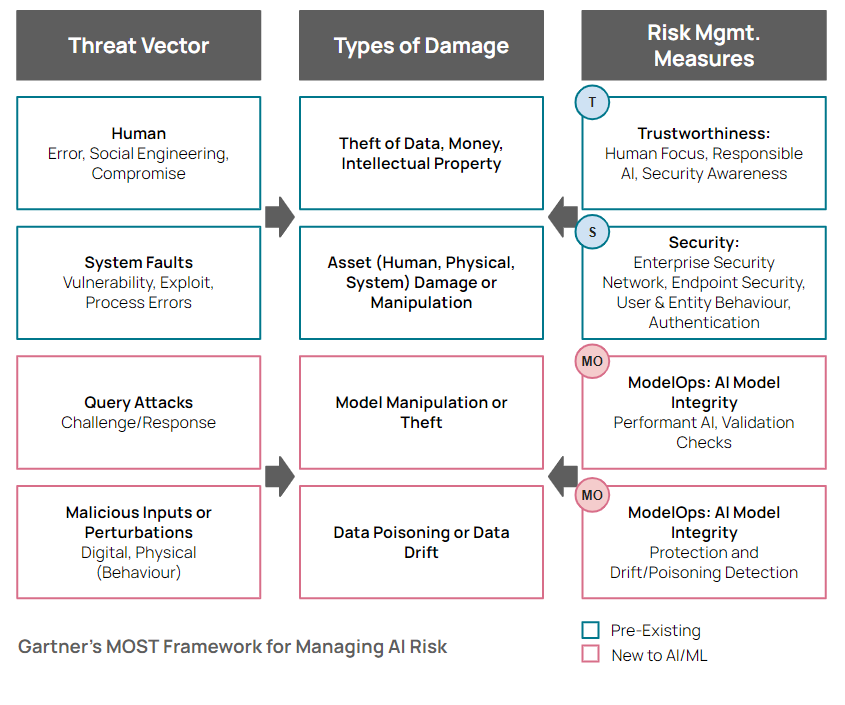

Understanding the threat of adversarial AI requires us to examine the unique risks that AI introduces to cybersecurity. One helpful perspective is Gartner’s MOST framework [4], which categorises these risks into four domains:

- Model: This includes risks related to the accuracy, fairness, and potential bias of the AI model, as well as its vulnerabilities to adversarial attacks. Are the models robust enough to withstand adversarial manipulation? Are they unintentionally biased or unfair in their operations?

- Operations: Operational risks revolve around data privacy, security, and compliance. How is the AI system handling sensitive data? Is it in compliance with the relevant regulations and standards?

- Strategy: Strategic risks concern the alignment of AI initiatives with business objectives and ethical considerations. Is the AI deployment in line with the organisation’s overall strategy and ethical stance?

- Technology: Technological risks encompass potential system vulnerabilities, tech maturity, interoperability, and resilience against attacks. Is the technology robust enough to withstand sophisticated attacks? Is it mature and interoperable with existing systems?

Adversarial AI presents a significant threat across all these domains, making it a formidable challenge for any organisation.

Current Gaps in Cybersecurity Solutions

Despite advances in cybersecurity solutions, substantial gaps remain that leave organisations vulnerable to adversarial AI. Cybersecurity has traditionally focused on protecting networks, devices, and software applications from threats. However, AI adds a new dimension to cybersecurity, necessitating novel approaches to defence.

Many existing solutions overlook AI-specific considerations such as:

- Authentication: How do we ensure that the AI system interacts only with verified users or systems? This is particularly important when the AI system has access to sensitive data or critical functions.

- Separation of Duty: AI systems often have broad access to data and functionalities. Implementing a separation of duties can minimise the potential damage in case of adversarial attacks.

- Input Validation: AI systems are particularly susceptible to input manipulation attacks, such as adversarial examples where the input is subtly modified to mislead the AI system.

- Denial of Service Mitigation: As with any system, AI models and services need to be protected against denial-of-service attacks, where the system is overwhelmed with requests leading to a shutdown.

Without addressing these concerns, AI/ML services are likely to remain vulnerable to adversaries of varying skill levels, from novice hackers to state-sponsored actors.
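As a rough illustration of two of the considerations above, the sketch below pairs a basic input check with a per-client rate limiter placed in front of a model endpoint. The feature count, value range, and limits are hypothetical assumptions, not recommended settings. Note that shape and range checks only reject malformed or out-of-range inputs; they will not, on their own, catch subtly modified adversarial examples that stay within valid ranges.

```python
import math
import time
from collections import deque

# Hypothetical limits for an illustrative model endpoint.
EXPECTED_FEATURES = 3
FEATURE_MIN, FEATURE_MAX = 0.0, 1.0


def validate_input(features):
    """Return True only if the input has the expected shape and value range."""
    if not isinstance(features, (list, tuple)) or len(features) != EXPECTED_FEATURES:
        return False
    for value in features:
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return False
        if math.isnan(value) or not FEATURE_MIN <= value <= FEATURE_MAX:
            return False
    return True


class RateLimiter:
    """Sliding-window limiter: at most max_requests per window per client."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self.history = {}

    def allow(self, client_id):
        now = self.clock()
        recent = self.history.setdefault(client_id, deque())
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False
        recent.append(now)
        return True


limiter = RateLimiter(max_requests=2, window_seconds=10)
request = [0.1, 0.5, 0.9]
if limiter.allow("client-a") and validate_input(request):
    print("request accepted for scoring")
```

In practice these checks would sit alongside authentication and the other controls listed above rather than replace them.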

Overcoming the Challenges: Key Elements of Secure AI

To create robust defences against adversarial AI, we must weave security into the fabric of our AI systems. Here are four key elements to consider:

- Bias Identification: AI systems should be designed to identify biases in data and models without being influenced by these biases in their decision-making process. Achieving this requires continuous learning and updating of the system’s understanding of biases, stereotypes, and cultural constructs. By identifying and mitigating bias, we can protect AI systems from social engineering attacks and dataset tampering that exploit these biases.

- Malicious Input Identification: One common adversarial AI strategy is to introduce maliciously crafted inputs designed to lead the AI system astray. Machine learning algorithms must therefore be equipped to distinguish between malicious inputs and benign ‘Black Swan’ events, rejecting training data that has a negative impact on results.

- ML Forensic Capabilities: Transparency and accountability are cornerstones of ethical AI. To this end, AI systems should have built-in forensic capabilities to provide users with clear insights into the AI’s decision-making process. These capabilities serve as a form of ‘AI intrusion detection’, allowing us to trace back the exact point in time a classifier made a decision, what data influenced it, and whether or not it was trustworthy.

- Sensitive Data Protection: AI systems often need access to large amounts of data, some of which can be sensitive. AI should be designed to recognise and protect sensitive information, even when humans might fail to realise its sensitivity.

By incorporating these elements into our AI systems, we can build a strong foundation for securing AI against adversarial threats.
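One way to picture the forensic capability described above is an append-only decision log that records when a prediction was made, by which model version, and on what input. The sketch below is a minimal illustration under assumed names (`DecisionLog`, `model_version`, and the record fields are hypothetical); it chains each record to the previous one with a SHA-256 hash so that later tampering with the log can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone


class DecisionLog:
    """Append-only log where each record stores the hash of the previous one."""

    def __init__(self):
        self.records = []

    def record(self, model_version, features, prediction):
        """Append one decision and return the stored record."""
        previous_hash = self.records[-1]["hash"] if self.records else ""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "features": features,
            "prediction": prediction,
            "previous_hash": previous_hash,
        }
        entry["hash"] = self._digest(entry)
        self.records.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        """Hash every field of the record except the hash itself."""
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def verify(self):
        """Return True if no record has been altered, removed or reordered."""
        previous_hash = ""
        for entry in self.records:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True


log = DecisionLog()
log.record("v1", [0.1, 0.2], "benign")
log.record("v1", [0.9, 0.8], "malicious")
print("log intact:", log.verify())
```

A production system would also need secure storage and access controls for the log itself; the hash chain only makes tampering evident, it does not prevent it.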

Protecting Machine Learning Models with Mantel Group

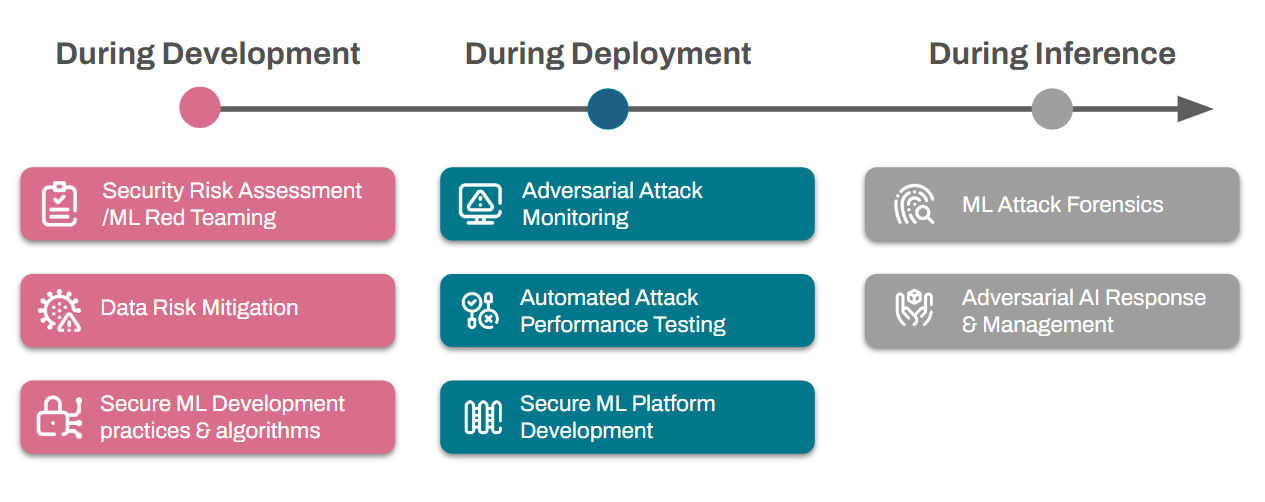

Securing ML models against adversarial AI is not a one-off task but a continuous process that spans across the entire lifecycle of the ML model. At Mantel Group, we understand this and provide holistic solutions to help you tackle adversarial AI.

During the ML Development Stage, incorporating Security Risk Assessment, Data Risk Mitigation, and Secure ML Development practices can help ensure that security is baked into the model from the ground up. At Mantel Group, we can guide you through this process, ensuring that your models are designed with the best security practices in mind.

In the ML Deployment Stage, it’s crucial to have mechanisms for continuous Adversarial Attack Monitoring and Automated Attack Performance Testing. Additionally, Secure ML Platform Development can help protect the deployed models from attacks. Our experts at Mantel Group are experienced in setting up robust monitoring systems and implementing secure platforms that can withstand adversarial attacks.

During an Attack on an ML System, rapid and effective response is key. ML Attack Forensics can help identify the nature and source of the attack, while Adversarial AI Response & Management strategies can help contain the attack and mitigate its impact. Mantel Group can provide you with the necessary tools and expertise to quickly respond to attacks, minimising their impact on your operations.

At Mantel Group, we understand the nuances of AI and cybersecurity. We can assist your company in each of these areas, with custom solutions designed to address your specific needs and help you become more resilient to adversarial AI attacks. We believe in building AI systems that are not just smart, but also secure.

Take action

Securing machine learning workflows against adversarial AI is more than a necessity; it’s an obligation to safeguard our businesses and customers. Through a deeper understanding of adversarial AI and its implications, businesses can fortify their AI systems, mitigating the risks in this new frontier of cybersecurity. With the right partner like Mantel Group, businesses can not only navigate these complex challenges but also leverage them to strengthen their AI capabilities.

Get in touch

Let’s enable your cyber security objectives

References

[1] https://atlas.mitre.org/resources/adversarial-ml-101

[2] https://www.microsoft.com/en-us/security/blog/2020/10/22/cyberattacks-against-machine-learning-systems-are-more-common-than-you-think/

[3] Kumar, R. S. S., Nyström, M., Lambert, J., Marshall, A., Goertzel, M., Comissoneru, A., … & Xia, S. (2020, May). Adversarial machine learning-industry perspectives. In 2020 IEEE security and privacy workshops (SPW) (pp. 69-75). IEEE.

[4] https://www.gartner.com/en/documents/4001144