A Front-Row Seat to AI’s Evolution

Last month, I had the unique opportunity to attend the Web Directions AI 2023 conference. Stepping into the venue, I was immediately enveloped by an electric atmosphere, charged with anticipation and excitement. Attendees weren’t just there to marvel at AI’s advancements; they were there to be part of its evolution. From tech veterans confidently discussing AI nuances to AI novices filled with curious wonder, the room was a melting pot of ambition and aspiration.

As the first session began, a pivotal question hovered in the air: In this expansive AI universe, where exactly do we all stand?

Photo by Halans on Flickr

Finding Your Place in the AI Ecosystem

There are four types of people in the generative AI ecosystem:

- Tool Makers: The masterminds crafting the backbone of generative AI, creating revolutionary tools from scratch.

- Productisers: The innovators who are productising generative AI, seamlessly integrating it into products to give them an accelerated edge in the market.

- Market Shapers: The visionary consultants and advisors, guiding businesses on how best to harness the power of generative AI and ensuring its transformative potential is fully realised.

- Consumers: Every one of us. From the tech-savvy teen using ChatGPT to the busy professional optimising daily routines with AI tools, we’re all a part of this narrative.

With our places in the AI world pinned down, attention naturally shifted to the products being built with it.

Crafting AI’s Tomorrow with Unicorns, Superchargers, and Slashers

When exploring the expansive AI product landscape, three categories come to the forefront:

- Unicorns: These are the ventures that, often through a mix of innovation and serendipity, aspire to become billion-dollar businesses, reshaping industries with groundbreaking ideas. The potential rewards are vast, but so are the uncertainties. Achieving ‘unicorn’ status can often feel like winning the lottery, based more on luck than strategy. For those navigating this space, it’s essential to understand the difference between seeking unicorn status and building a consistently profitable company, and to remember the mantra of failing fast: pivot quickly if things don’t align.

- Superchargers: Think of your favourite apps or platforms, but with an AI upgrade. These products have a familiar feel but offer enhanced functionalities courtesy of AI integration.

- Slashers: Efficiency is the game. Whether it’s optimising workflows or automating tasks previously requiring human touch, the goal is clear: leveraging AI to drive down costs without compromising on quality.

Photo by Halans on Flickr

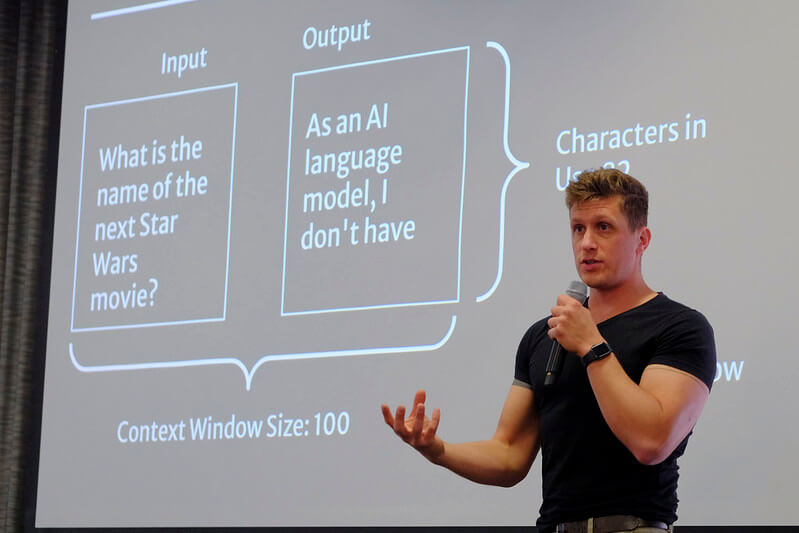

From Data Classification to Creative Synthesis: A Journey with GPT Models

The landscape of artificial intelligence has come a long way from its primary role in data classification. Initially, AI focused on sifting through data to categorise and understand patterns. As its capabilities grew exponentially, it moved beyond interpretation. Today, AI doesn’t just analyse; it creates. This transition has seen AI evolve from a mere analytical tool to an inventive force, capable of producing both original and intricate works.

Photo by Halans on Flickr

Such advancements owe a lot to models like Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and diffusion models. VAEs and GANs learn the patterns in their training data and generate new samples that follow them. Diffusion models take a different route: they start from random noise and refine it, step by step, into a coherent image. These breakthroughs have unlocked new realms of possibilities in image generation.
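To make the idea of turning noise into an image a little more concrete, here is a minimal Python sketch of the forward (noising) half of a DDPM-style diffusion model. A diffusion model learns to run this process in reverse. The linear noise schedule, its endpoints, and the four-value toy “image” are illustrative assumptions. A real model also trains a neural network to predict and remove the noise at each step, and that part is not shown.

```python
import math
import random


def linear_beta_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Noise variance added at each step (assumed linear schedule)."""
    if steps == 1:
        return [beta_start]
    delta = (beta_end - beta_start) / (steps - 1)
    return [beta_start + i * delta for i in range(steps)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Cumulative product of (1 - beta): how much original signal survives by step t."""
    result, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        result.append(running)
    return result


def noisy_sample(x0: list[float], t: int, abars: list[float], rng: random.Random) -> list[float]:
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    signal = math.sqrt(abars[t])
    noise_scale = math.sqrt(1.0 - abars[t])
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
abars = alpha_bars(betas)
image = [0.9, -0.3, 0.5, 0.1]  # toy "image": four normalised pixel values
rng = random.Random(0)

print(round(abars[0], 4))  # nearly all signal remains at the first step
print(abars[-1] < 1e-3)    # nearly pure noise by the last step
print(noisy_sample(image, 500, abars, rng))
```

At step 0 almost all of the original signal survives. By the final step the sample is essentially pure noise. Generation runs the process backwards, starting from noise and denoising one step at a time.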

The game-changer in text generation and understanding has been the transformer architecture, which is built around the attention mechanism. Standing tall among transformer models are the Generative Pre-trained Transformers, better known as “GPT” models. These models epitomise text-generation capabilities, processing vast amounts of data to produce coherent and contextually relevant content. At their core, they predict the most likely next word (strictly, the next token) in a sequence, and they do this remarkably well. They are pre-trained on enormous volumes of text, much of it drawn from the internet, and this is what makes their predictions so refined. Their “contextual magic” comes from the context window. The window acts as a form of short-term memory: it determines how much text the model can consider at once during generation, and its size is measured in “tokens”.
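Real GPT models use a transformer that attends over the whole context window. The underlying objective, though, is predicting the most likely next word from what came before, and that can be shown with something far simpler. The sketch below is a toy bigram predictor that only looks at the previous word. The training sentence and the function names are made up for illustration, and GPT is not implemented this way.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedy generation: repeatedly append the most likely next word."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


corpus = "the model reads the prompt and the model predicts the next word"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "model" (it follows "the" twice)
print(generate(model, "the", 3))
```

A GPT model replaces the simple frequency table with billions of learned parameters. It also conditions on the entire context window rather than a single preceding word.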

In GPT models, a token can represent a character, a word, or even part of a word. The context window, or token limit, varies among models:

- GPT-3 (in its GPT-3.5 versions) has a 4K token limit.

- GPT-4 offers 8K tokens as standard and extends to 32K tokens in its larger version.

- Claude 2, a newer entrant, impressively reaches up to 100K tokens.
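Once a conversation outgrows the context window, something has to give. A common approach is to drop the oldest messages first. This is a minimal sketch that assumes a naive whitespace tokenizer. Real models use subword tokenizers, so true token counts run higher than word counts. The 4,096 limit echoes the 4K figure above, and `reserve_for_reply` is a hypothetical parameter that leaves room for the model’s answer.

```python
def naive_tokenize(text: str) -> list[str]:
    """Stand-in tokenizer: splits on whitespace (real models use subword tokens)."""
    return text.split()


def fit_to_window(messages: list[str], limit: int, reserve_for_reply: int = 0) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window."""
    budget = limit - reserve_for_reply
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(naive_tokenize(message))
        if used + cost > budget:
            break  # this message and everything older no longer fit
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["first question " * 1500, "second question " * 800, "latest question"]
window = fit_to_window(history, limit=4096, reserve_for_reply=1000)
print(len(window))  # 2: the oldest message (3,000 "tokens") is dropped
```

With a 100K window, the same history would fit comfortably. This is why larger limits matter for long documents and conversations.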

These models are more than their technicalities. Each model has inherent behaviour that can be steered with a system prompt, which supplies the primary guidelines shaping its responses. A common companion to this technology is the vector database. An embedding model converts words, sentences, and even larger pieces of information into numerical vectors in a high-dimensional space, where similar meanings sit close together. The vector database stores these vectors and can quickly find the ones closest to a query. Passing the retrieved passages to the model alongside the question makes its output more contextually relevant and accurate. For more specialised results, these models can also be fine-tuned on custom datasets, so that their outcomes align with the desired intent.
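The retrieval pattern described above can be sketched in a few lines of Python: embed the text, store the vectors, search for the closest matches, then hand them to the model alongside a system prompt. The sketch rests on several assumptions. `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and an in-memory list stands in for the vector database. `messages` follows the common system/user chat-message layout but does not call any real API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.add("returns are accepted within 30 days for a full refund")
store.add("the office is closed on public holidays")
store.add("shipping usually takes five business days")

question = "can i get a refund on returns"
context = store.search(question, k=1)

# The system prompt sets the ground rules; retrieved passages go into the user turn.
messages = [
    {"role": "system", "content": "Answer only from the provided context."},
    {"role": "user", "content": "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question},
]
print(context)
```

Replacing `embed` with a real embedding model, and the list with a real vector database, changes how good the matches are. The overall flow stays the same.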

Photo by Halans on Flickr

AI in Action: Real-World Case Studies

Diving into the next session, we shifted our focus to the tangible applications of AI, which offered a sneak peek into the future of the industries these innovations touch. From workplace safety to broadcasting and education, AI’s transformative impact is evident. Here are three standout presentations that provided a deeper understanding of AI’s potential to reshape how we work, learn, and consume information.

1. Microsoft’s AI-Driven Safety and Security Tools

In an era where video content is everywhere, efficiently analysing and summarising this data becomes paramount, especially in sectors like workplace safety and security. Microsoft, recognising the demand, introduced an innovative AI tool that promises a paradigm shift in how we process video data:

- Vector Search Capabilities: The tool enables users to sift through hours of video effortlessly using natural language queries. Searches such as “man running” or “water spilled” bring up relevant footage in moments, eliminating the need for tedious manual scanning.

- Video Summarisation: Instead of combing through extensive footage, users can now generate concise summaries that pinpoint the vital moments, streamlining the review process.

- Safety Enhancement: Highlighting the tool’s practical utility, its ability to quickly identify specific incidents, like “woman falling over”, underscores its potential. Such capabilities ensure timely responses to safety concerns, potentially preventing further accidents or complications.

Below is a demo of their Vector Search capabilities – available to test yourself here.

With such advancements, Microsoft’s solution exemplifies how AI is continuously reshaping our approach to safety and data processing, bridging the gap between extensive datasets and actionable insights.

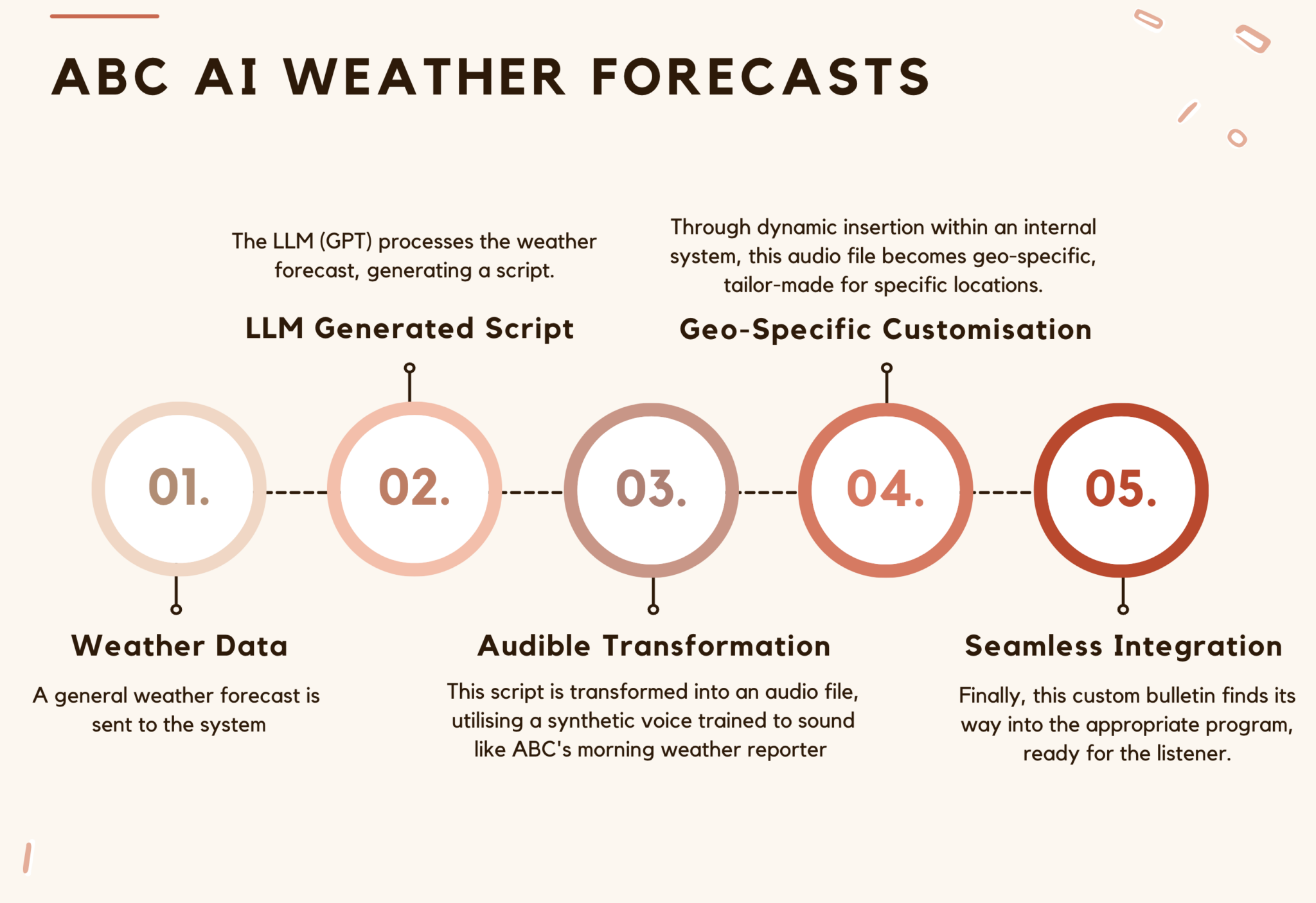
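The following is a hypothetical sketch, not Microsoft’s implementation, of how natural-language search over footage might pinpoint key moments. It assumes each sampled frame already has a text caption produced by some vision model, and it uses simple word overlap as a crude stand-in for vector similarity. Neighbouring matches are merged into time ranges that a reviewer or a summary could jump straight to. `Frame`, `find_moments`, the threshold, and the footage are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    seconds: int  # timestamp of the sampled frame
    caption: str  # hypothetical description produced by a vision model


def score(query: str, caption: str) -> float:
    """Fraction of query words found in the caption (crude stand-in for vector similarity)."""
    q = set(query.lower().split())
    c = set(caption.lower().split())
    return len(q & c) / len(q) if q else 0.0


def find_moments(frames: list[Frame], query: str, threshold: float = 0.6,
                 max_gap: int = 2) -> list[tuple[int, int]]:
    """Return (start, end) second ranges where nearby frames match the query."""
    hits = [f.seconds for f in frames if score(query, f.caption) >= threshold]
    moments: list[tuple[int, int]] = []
    for t in hits:
        if moments and t - moments[-1][1] <= max_gap:
            moments[-1] = (moments[-1][0], t)  # extend the current moment
        else:
            moments.append((t, t))  # start a new moment
    return moments


footage = [
    Frame(0, "empty corridor"),
    Frame(1, "woman walking down corridor"),
    Frame(2, "woman falling over near stairs"),
    Frame(3, "woman falling over onto floor"),
    Frame(4, "woman sitting on floor"),
    Frame(40, "man running through car park"),
    Frame(90, "woman falling over wet floor"),
]
print(find_moments(footage, "woman falling over"))  # [(2, 3), (90, 90)]
```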

2. Australian Broadcasting Corporation’s Tailored AI Weather Bulletins

Radio broadcasting is a challenging field. Juggling programs, fitting in local updates, and ensuring content relevance can be a very difficult task. Erika, a local radio broadcaster, knew this all too well, especially when it came to weaving in local weather updates. Meanwhile, fishermen tuning in at early hours craved a more targeted update, focusing solely on their local weather rather than the broader scope of, say, all of QLD.

Here’s where ABC’s experiment came into play:

From Raw Data to Tailored Broadcast: This flowchart traces the journey of weather data as it’s transformed by AI into a geo-specific audio bulletin. Each step represents a fusion of advanced technology and human insight, ensuring listeners receive relevant and timely weather updates seamlessly integrated into their program.

ABC’s AI experiment explored prompt optimisation, accuracy checks, scalability, and audience feedback. Bringing GPT and its chatbot capabilities into the workflow marked a notable shift towards AI in the broadcasting realm.
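The first steps of a pipeline like this can be sketched in Python: narrow raw weather data down to one listener’s area, then turn it into a prompt. Everything in the sketch is hypothetical. The `Forecast` fields, region names, figures, and prompt wording are illustrative and are not ABC’s actual data or prompts. Restricting the model to the supplied figures is one simple way to support the accuracy checks mentioned above. The generated text would then go to a text-to-speech step to produce the audio bulletin.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    region: str
    summary: str
    wind_kmh: int
    swell_m: float


def local_forecasts(forecasts: list[Forecast], region: str) -> list[Forecast]:
    """Keep only the forecasts for the listener's region."""
    return [f for f in forecasts if f.region.lower() == region.lower()]


def build_bulletin_prompt(forecasts: list[Forecast], region: str, max_words: int = 60) -> str:
    """Assemble a prompt asking a language model for a short, radio-ready bulletin."""
    lines = [
        f"- {f.summary}; wind {f.wind_kmh} km/h; swell {f.swell_m} m"
        for f in local_forecasts(forecasts, region)
    ]
    if not lines:
        raise ValueError(f"no forecast data for {region}")
    return (
        f"Write a weather bulletin for radio listeners in {region}, "
        f"in under {max_words} words, using only these figures:\n" + "\n".join(lines)
    )


data = [
    Forecast("Moreton Bay", "Sunny, early fog", 15, 1.0),
    Forecast("Gold Coast", "Showers clearing", 25, 1.5),
]
prompt = build_bulletin_prompt(data, "Moreton Bay")
print(prompt)
```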

3. Atomi’s AI-powered Education Journey

The educational terrain is rapidly transforming, and Atomi is at the forefront, harnessing the prowess of Large Language Models (LLMs) to redefine the student learning experience. However, the narrative isn’t just about how AI is used; it’s about how it’s optimised to achieve better results, faster responses, and reduced costs.

- Content Generation: Atomi’s AI is not just a passive tool but actively contributes by generating educational content tailored for learners.

- In-depth Assessment: Beyond merely marking, the platform’s AI delves deep into understanding student responses. It doesn’t just assign a grade; it clarifies the rationale, explaining the correctness or incorrectness of an answer.

- Future Aspirations: The vision for Atomi is grand. They’re steering towards an AI system proficient in evaluating complex student submissions, such as HSC essays and detailed research papers.
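A common way to get both a mark and a rationale from a language model is to ask for structured output and validate it before it reaches a student. The sketch below is a hypothetical illustration, not Atomi’s system. `grading_prompt`, `parse_feedback`, the JSON shape, and the model reply are all made up, and a hard-coded string stands in for the actual LLM call.

```python
import json
from dataclasses import dataclass


@dataclass
class Feedback:
    score: int
    max_score: int
    rationale: str


def grading_prompt(question: str, answer: str, max_score: int) -> str:
    """Ask the model for a mark and an explanation, as machine-readable JSON."""
    return (
        f"Question: {question}\nStudent answer: {answer}\n"
        f"Mark the answer out of {max_score}. Reply with JSON only: "
        '{"score": <int>, "rationale": "<why the answer is right or wrong>"}'
    )


def parse_feedback(raw: str, max_score: int) -> Feedback:
    """Validate the model's reply before showing it to a student."""
    data = json.loads(raw)
    score = int(data["score"])
    rationale = str(data["rationale"]).strip()
    if not 0 <= score <= max_score:
        raise ValueError(f"score {score} outside 0..{max_score}")
    if not rationale:
        raise ValueError("missing rationale")
    return Feedback(score, max_score, rationale)


prompt = grading_prompt("What is 7 x 8?", "54", max_score=1)
# A made-up model reply, standing in for a real LLM call:
reply = '{"score": 0, "rationale": "7 x 8 is 56; 54 is likely a slip from 6 x 9."}'
print(parse_feedback(reply, max_score=1))
```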

Yet, what truly stood out in the demonstration was the optimisation of different AI models to achieve various objectives. To understand the depths of this optimisation, let’s break down their model choices:

| Model | Accuracy (% of baseline) | Latency (ms) | Cost ratio (relative to baseline) | Viability (Limited / Moderate / High) |
| --- | --- | --- | --- | --- |
| GPT-3 Finetuned (Baseline) | 100% | 450 | 1x ($30k/year) | High |
| GPT-4 0-shot | 93% | 630 | 0.6x | Limited due to rate limit |
| GPT-4 3-shot | 105% | 700 | 2.0x | Limited due to rate limit |
| PaLM 2 Finetuned | 99% | 450 | 1.6x | High |
| RoBERTa Finetuned | 89% | 30 | 0.2x | High |
| RoBERTa GPT-3 knowledge transfer | 97% | 30 | 0.2x | High |
| RoBERTa GPT-4 knowledge transfer | 99% | 30 | 0.2x | High |

The table compares the models on performance (accuracy), speed (latency), and cost. The fine-tuned GPT-3 model serves as the baseline for accuracy and cost. Only GPT-4 with three examples in the prompt (3-shot) beats it on accuracy, and it does so at twice the cost and with limited viability due to rate limits. At the other end, the fine-tuned RoBERTa model cuts latency to a mere 30 milliseconds at a fifth of the cost, though it reaches only 89% of baseline accuracy. Knowledge transfer closes most of that gap: RoBERTa models trained with GPT-3 or GPT-4 knowledge reach 97% and 99% of baseline accuracy while keeping the same speed and cost advantage.
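The table’s figures can be turned into a simple selection rule. This sketch uses the accuracy, latency, and cost numbers from the table. It converts cost ratios into approximate annual dollars using the $30k/year baseline, which assumes costs scale linearly with the ratio. The rule itself is a hypothetical example rather than Atomi’s criteria: keep only viable models above an accuracy floor, then prefer the cheapest, fastest, and most accurate.

```python
from dataclasses import dataclass

BASELINE_COST_PER_YEAR = 30_000  # GPT-3 fine-tuned baseline, per the table


@dataclass
class ModelOption:
    name: str
    accuracy_pct: int  # % of baseline accuracy
    latency_ms: int
    cost_ratio: float  # relative to baseline
    viability: str

    @property
    def annual_cost(self) -> float:
        # Assumes cost scales linearly with the ratio shown in the table.
        return self.cost_ratio * BASELINE_COST_PER_YEAR


OPTIONS = [
    ModelOption("GPT-3 Finetuned (Baseline)", 100, 450, 1.0, "High"),
    ModelOption("GPT-4 0-shot", 93, 630, 0.6, "Limited"),
    ModelOption("GPT-4 3-shot", 105, 700, 2.0, "Limited"),
    ModelOption("PaLM 2 Finetuned", 99, 450, 1.6, "High"),
    ModelOption("RoBERTa Finetuned", 89, 30, 0.2, "High"),
    ModelOption("RoBERTa GPT-3 knowledge transfer", 97, 30, 0.2, "High"),
    ModelOption("RoBERTa GPT-4 knowledge transfer", 99, 30, 0.2, "High"),
]


def choose(options: list[ModelOption], min_accuracy: int) -> ModelOption:
    """Cheapest, then fastest, then most accurate model that is viable and accurate enough."""
    eligible = [o for o in options if o.viability == "High" and o.accuracy_pct >= min_accuracy]
    if not eligible:
        raise ValueError("no model meets the constraints")
    return min(eligible, key=lambda o: (o.cost_ratio, o.latency_ms, -o.accuracy_pct))


best = choose(OPTIONS, min_accuracy=95)
print(best.name, best.annual_cost)
```

With a 95% accuracy floor, the rule picks the RoBERTa model with GPT-4 knowledge transfer at roughly $6k a year. Raising the floor to 100% leaves only the GPT-3 baseline.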

For readers with startups eyeing AI integration, Atomi’s approach underscores the importance of model selection: optimising quality while saving on time and costs.

AI Ethics and the Road Ahead

Artificial intelligence (AI) is a rapidly advancing field with applications spanning numerous sectors. As its prevalence increases, so do the ethical concerns associated with its use. Here are key ethical challenges in AI:

- Bias in AI: AI systems often rely on vast data sets for training. If this data contains inherent biases, the resulting AI can perpetuate or exacerbate these biases, potentially leading to skewed or unfair outcomes.

- Unpredictable Outcomes: Many AI systems, once deployed, can produce outcomes that were not entirely anticipated during their design phase. This unpredictability poses challenges in managing and rectifying any unintended consequences.

- Regulatory Challenges: Proper AI regulation requires balancing the promotion of innovation with the assurance of public safety. Diverse regulations across countries further complicate this balance.

- Human vs. Bot Differentiation: Advanced AI systems, especially in natural language processing, make it increasingly difficult to distinguish between human and AI-generated content.
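One simple way to check a deployed model for the kind of bias described above is to compare how often it produces a favourable outcome for each group. This measure is known as demographic parity. The sketch below uses made-up decisions for two hypothetical groups. Real audits look at several fairness metrics, and a gap on its own does not prove unfairness, but it flags where to look.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up model decisions for two applicant groups
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(selection_rates(decisions))  # {'A': 0.8, 'B': 0.5}
print(parity_gap(decisions))
```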

Education plays a pivotal role in addressing these concerns:

AI Education and Its Importance

For society to harness the benefits of AI while minimising potential drawbacks, widespread understanding of AI is crucial.

- Collaborative Learning: As AI becomes more integrated into educational tools, there’s potential for a shift from individual-focused learning to more collaborative approaches.

- Legal & Confidentiality Concerns: The deployment of AI in various industries brings up questions related to responsibility, data protection, and intellectual property rights.

In summary, the integration of AI in our daily lives presents vast opportunities and accompanying challenges. While the technological benefits are clear-cut, the barrier to adoption is culture. As we continue to advance, careful consideration of the ethical implications becomes paramount to ensure responsible development and use of artificial intelligence.

Conclusion

The Web Directions AI 2023 conference was a practical immersion into the evolving world of AI. Beyond the enriching case studies and technical details, the event delved deep into AI’s ethical dimensions and broader implications. While I appreciated the hard knowledge shared, it was these broader themes that provided me a fresh perspective on AI’s potential and challenges.

The sessions emphasised the dual nature of AI: its vast potential to transform industries, alongside the pressing ethical challenges it presents. Such discussions underscored the need for a balanced and considered approach to AI’s development and integration. For me, spending a day immersed in these discussions was invaluable, and it deepened both my understanding of the AI domain and my enthusiasm for it.

At Mantel Group, we are positioning ourselves to be an integral part of this transformative era. We understand the nuances of the AI landscape and are aware of the role we play in bridging the gap between technological advancements and practical applications. If you’re considering AI integration or looking to enhance your current AI systems, reach out to us; we’d love to help.