1. What is an AI Agent?

AI is evolving toward “agents”: autonomous systems that can solve complex problems and adapt to changing environments without constant human guidance. This represents a significant leap in AI capabilities, making AI more dynamic, able to respond in real time, and able to handle a wide variety of tasks beyond language processing.

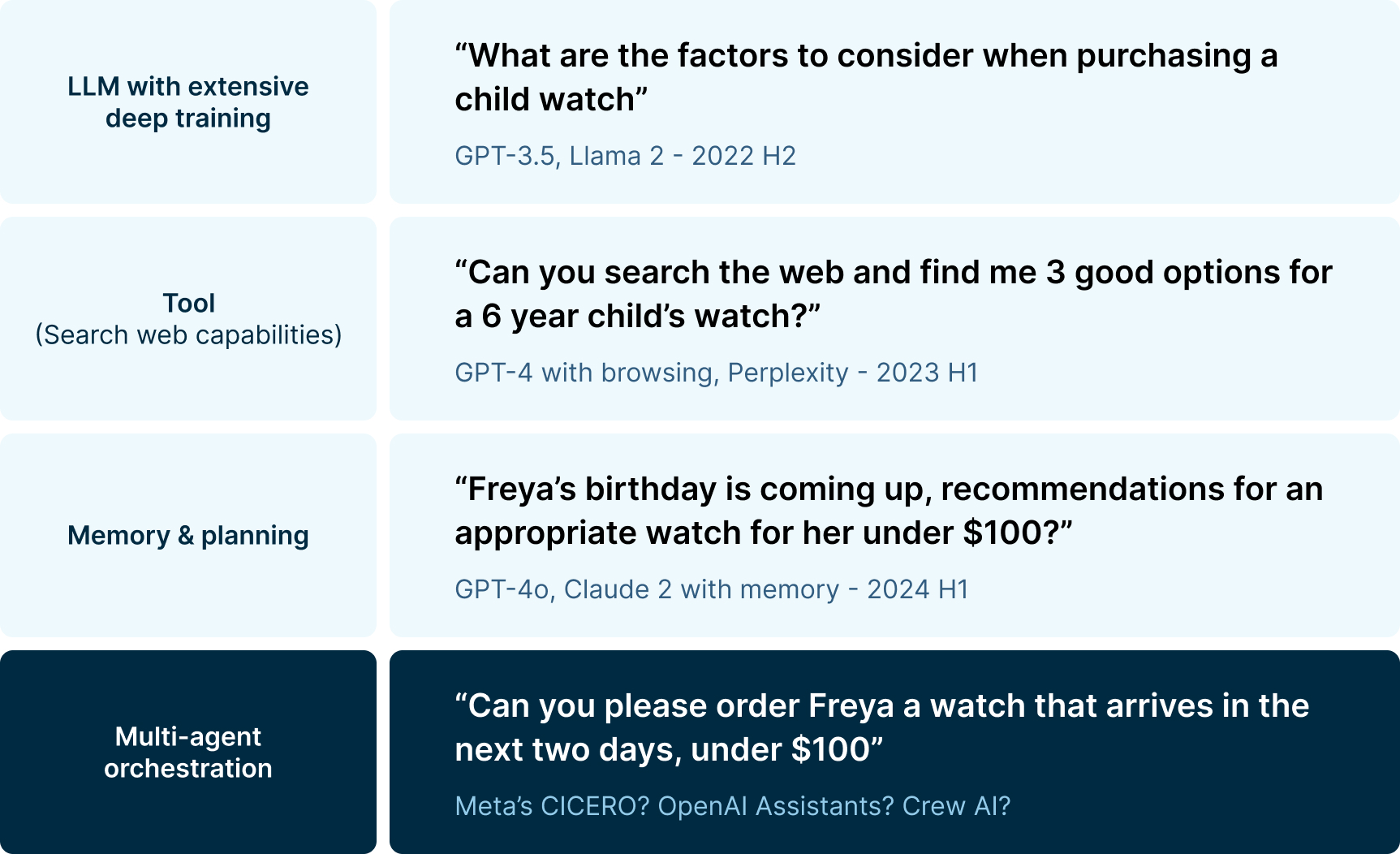

Figure 1 – The evolution of LLMs into agents – The core building block of an agent, the Large Language Model (LLM), has evolved from a language model with static, pre-trained knowledge of the world and a narrow scope of ability into a dynamic assistant with refreshed knowledge and a wider scope of ability. The LLM unit, on the left, is incrementally enhanced with tools, memory, and planning to produce an agent. Multiple agents working together form systems that can solve highly complex tasks. Consumer products that have these enhancements are shown on the right-hand side of the image.

2. Agents vs. Traditional AI

Traditional AI models require task-specific training to excel at narrow objectives, while generative AI models like LLMs can handle a broader range of tasks through their language understanding capabilities.

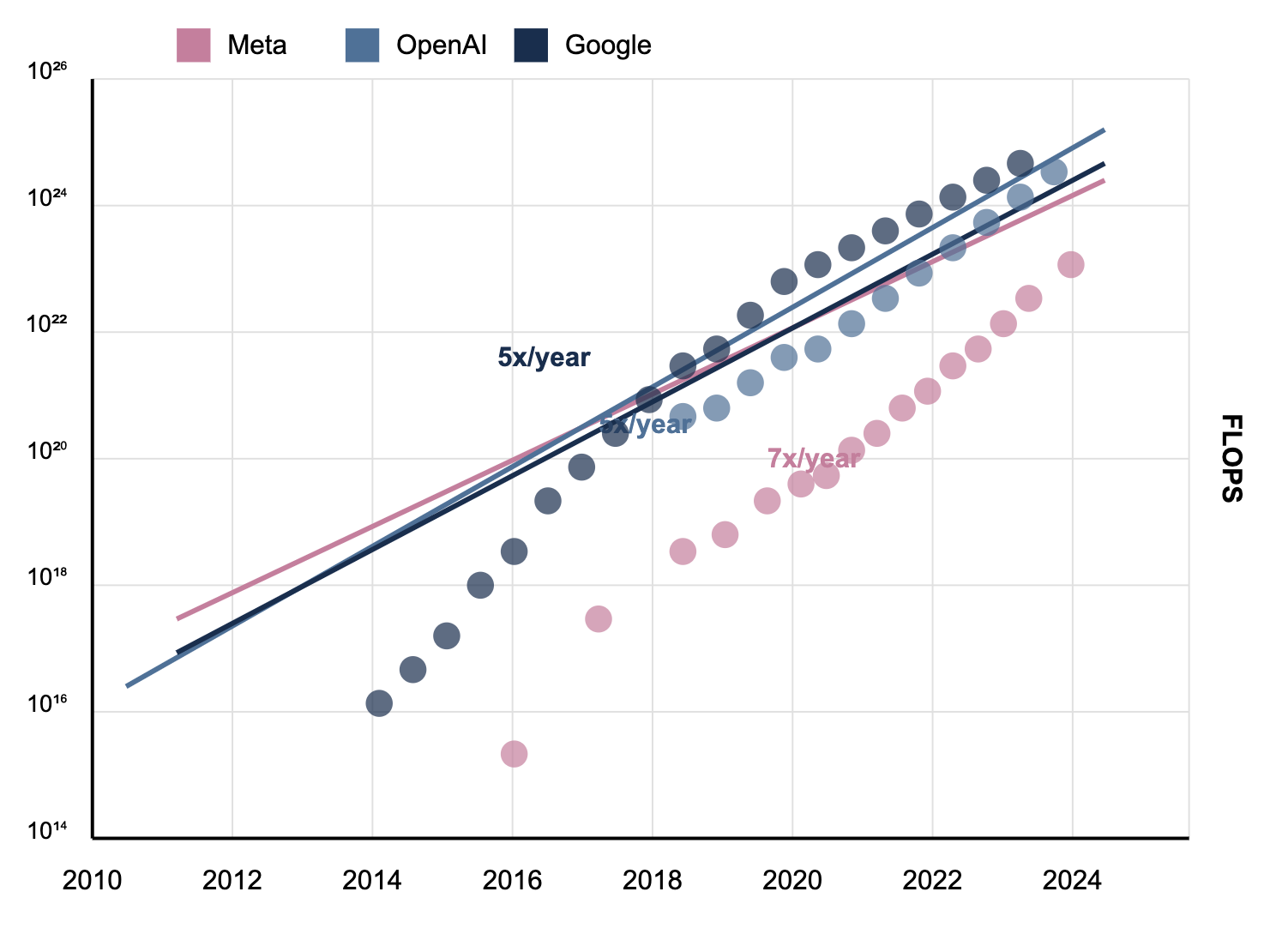

Agent-based AI, a specialised subset of generative AI, takes this further by equipping LLMs with tools, memory, and planning abilities that allow them to tackle diverse objectives with minimal additional training and human oversight. Agents also overcome bottlenecks in intelligence that LLMs face due to compute limitations, as shown below.

Figure 2 – Natural Language Processing (NLP) models and LLMs evolving at a rapid pace. The rapid growth in size and complexity of LLMs has been likened to a new form of Moore’s Law. Example LLMs above have seen their parameters increase exponentially over the past few years, with some models growing by a factor of 10 annually. This growth has plateaued recently due to compute bottlenecks involved in training LLMs, further elevating the importance of agents, which can tackle tasks standalone LLMs cannot.

3. Aren’t agents just LLMs?

Agents are more than just LLMs: they are enhanced with reasoning frameworks, tools, and memory that give them refreshed knowledge, extended capabilities, and the ability to solve problems autonomously.

Let’s take the example of a claims agent: while a basic LLM might explain insurance policy terms, a claims agent can access your policy details, analyse damage photos, check coverage against specific scenarios, remember your claim history, and orchestrate the entire claims process from submission to resolution.

This integration of capabilities creates a system that doesn’t just respond to questions, but proactively works through complex tasks.

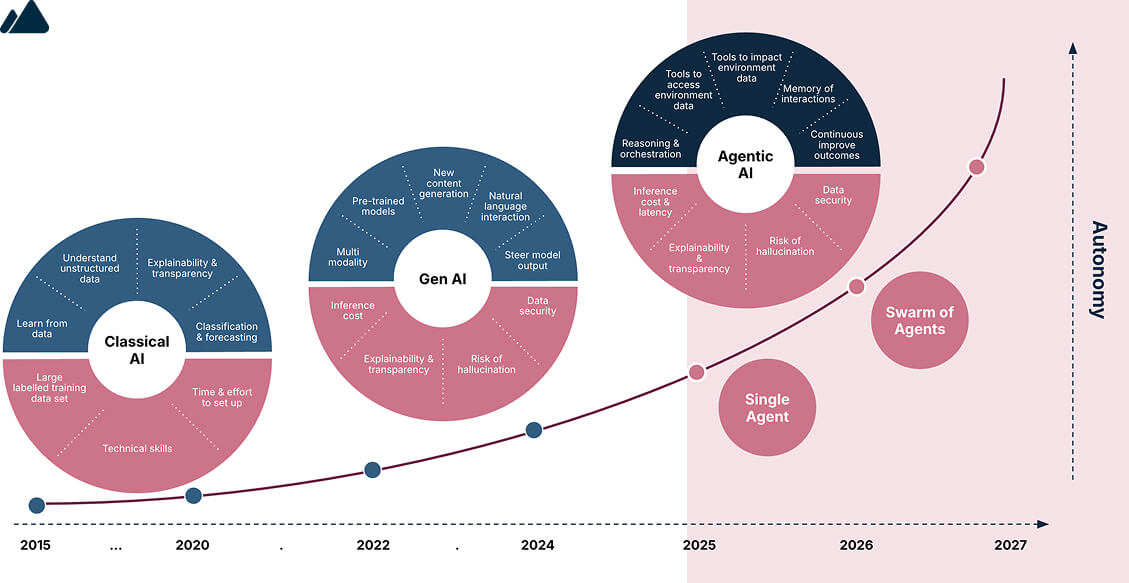

Figure 3 – Classical AI vs LLMs vs Agents – This timeline shows AI’s evolution from rule-based systems to today’s language models, and toward increasingly autonomous agents, with strengths and weaknesses of each milestone of AI in blue and pink, respectively. The vertical axis reveals how AI autonomy increases dramatically as we move from foundation models toward single agents and eventually agent swarms that can collaborate with minimal human oversight.

4. The building blocks of an agent

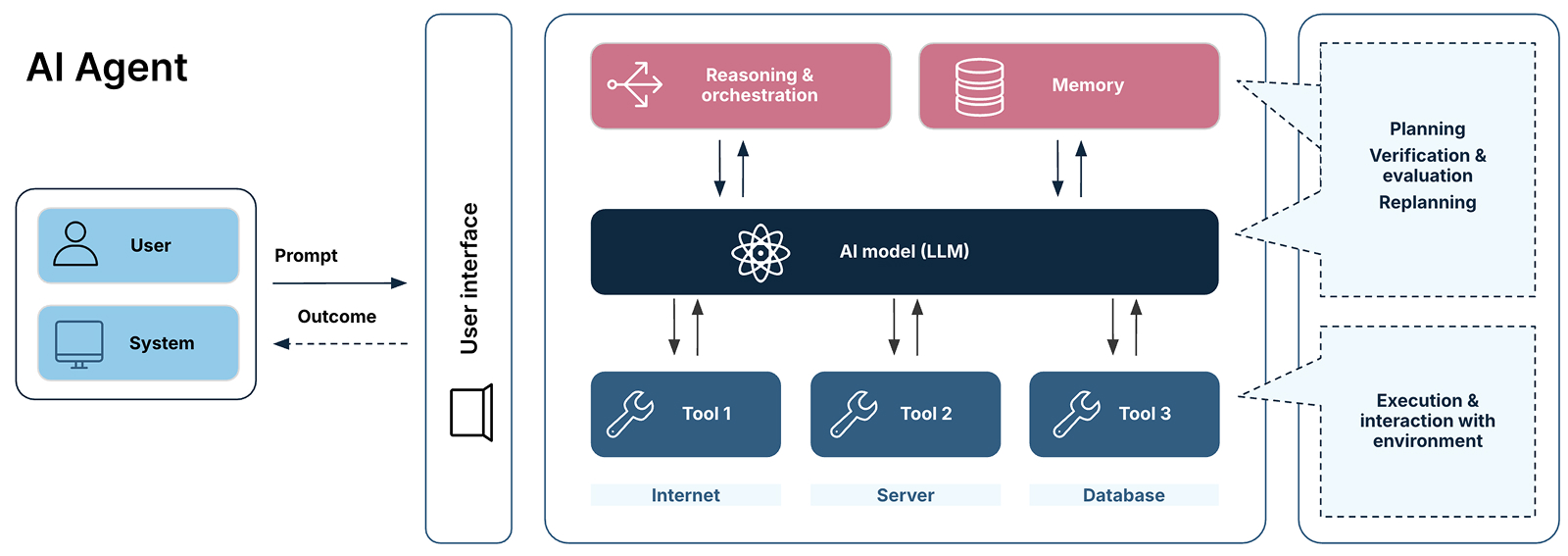

Agents operate by planning, executing, and evaluating tasks. They can even function similarly to “digital twins” of real-world processes, automating and optimising a range of workflows. Key components of an agent include the user interface (UI), the AI model (the “brain”), tools (resources for completing tasks and extending the agent’s knowledge), and memory (a store for remembering past interactions and preferences). The chart below shows how these components interact and what process flows they enable.

Figure 4 – Agent Components – a user or system interacts with an agent through a language query, via an interface. The agent model takes this query and plans its resolution with tools that connect the agent to external environments (i.e. the internet, servers, or databases). The solution and original query can be stored in memory to enhance the user experience later.
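To make the component interaction in Figure 4 more concrete, here is a minimal Python sketch of the plan, execute, evaluate loop with tools and memory, using the insurance claims example. It is an illustration under stated assumptions, not a production design: the tools `lookup_policy` and `search_faq`, the `Agent` class, and the keyword rule in `plan` are all hypothetical, and the keyword rule stands in for the LLM “brain” so that the sketch runs without calling any model.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


# Hypothetical tools: stand-ins for connections to external environments.
# A real agent would call a policy database or a search API here.
def lookup_policy(customer_id: str) -> str:
    policies = {"c-001": "Home insurance: water damage covered up to 5000"}
    return policies.get(customer_id, "")


def search_faq(question: str) -> str:
    faq = {"excess": "The excess is the amount you pay towards each claim."}
    for keyword, answer in faq.items():
        if keyword in question.lower():
            return answer
    return ""


@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    # Memory: (query, answer) pairs from past successful interactions.
    memory: list[tuple[str, str]] = field(default_factory=list)

    def recall(self, query: str) -> Optional[str]:
        """Return a stored answer if this exact query was resolved before."""
        for past_query, past_answer in self.memory:
            if past_query == query:
                return past_answer
        return None

    def plan(self, query: str) -> tuple[str, str]:
        """Stand-in for the LLM 'brain': choose a tool and its input.

        ASSUMPTION: a keyword rule replaces the model so the sketch runs offline.
        """
        if "policy" in query.lower():
            return "lookup_policy", query.split()[-1]
        return "search_faq", query

    def evaluate(self, result: str) -> bool:
        """Minimal self-check: an empty tool result counts as a failure."""
        return bool(result)

    def handle(self, query: str) -> str:
        """Interface entry point: recall, plan, execute, evaluate, remember."""
        remembered = self.recall(query)
        if remembered is not None:
            return remembered
        tool_name, tool_input = self.plan(query)
        result = self.tools[tool_name](tool_input)
        if not self.evaluate(result):
            return "Escalating to a human agent."
        self.memory.append((query, result))  # store query and solution
        return result


agent = Agent(tools={"lookup_policy": lookup_policy, "search_faq": search_faq})
print(agent.handle("Show the policy for c-001"))
print(agent.handle("What is an excess?"))
print(agent.handle("Show the policy for c-999"))
```

Keeping tools as plain entries in a dictionary is one way to reflect the modularity the article recommends: swapping in a real model for `plan`, or adding a tool, does not change the rest of the loop.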

5. Agents will transform business processes

Although it is still early days, agents have enormous potential for businesses. They can automate processes, generate insights, and even create and improve software. However, not every process should be replaced by an agent: traditional workflows remain optimal for structured tasks with predictable outcomes, while agents excel in scenarios with multiple valid approaches or solutions.

Agents deliver significant value when the cost of manual execution outweighs the investment in automation. Some industry leaders predict that agents could surpass traditional SaaS tenfold, with their strength lying in specialised, measurable workflows rather than broad automation. Early adopters have the opportunity to define the value model for agents and unlock significant efficiency gains.
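The condition that the cost of manual execution must outweigh the investment in automation can be written as a simple break-even inequality. The symbols and the worked numbers are illustrative assumptions, not figures from any real project: let N be the number of times a task is executed over some period, c_m the cost of one manual execution, c_a the running cost of one agent execution (model calls, tools, oversight), and C_0 the upfront cost of building the agent.

```latex
N\,c_m > C_0 + N\,c_a
\quad\Longleftrightarrow\quad
N > \frac{C_0}{c_m - c_a} \qquad (c_m > c_a)

\text{e.g. } C_0 = 50{,}000,\; c_m = 12,\; c_a = 2
\;\Rightarrow\; N > \frac{50{,}000}{12 - 2} = 5{,}000
```

The same inequality shows why the running cost per agent execution matters as much as the build cost: as c_a approaches c_m, the break-even volume grows without bound.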

6. What are the best applications for agents?

Agents are gaining significant traction in the workflows described in Figure 5 below: domains that tolerate some flexibility in precision, response time, and implementation cost.

These applications are ideal starting points because they draw on LLMs’ core strengths in processing information, generating responses, and synthesising knowledge. We are likely to see individual components of these workflows implemented as single-agent systems, with end-to-end workflows potentially realised as multi-agent systems.

Figure 5 – Ideal applications for agents – Agents are best placed to provide value in ‘vertical’ workflows that focus on single, specialised processes, like knowledge retrieval in customer service. In contrast, a ‘horizontal’ workflow spans multiple processes, covering the entire customer service function.

7. How do we future-proof and hire the right talent?

AI agent development benefits from various roles and skillsets. Consider having a system architect, ideally with AI knowledge, address requirements around cost, scale, latency, security, flexibility to accommodate an evolving tool landscape, and cloud integration when building agentic architectures.

Business analysts might help identify high-impact use cases and collaborate on developing evaluation metrics aligned with business priorities. Data scientists could apply their prompt engineering skills to improve agent behaviour, while ML engineers, working with software engineers, might focus on optimising the agentic system for production readiness and extensibility. This collaborative approach can help prioritise business outcomes, improve agent performance, and thoughtfully integrate agents into existing business workflows.

When these specialists work together effectively, organisations may better position themselves to realise value while managing risks when implementing agents across business functions.

8. How do we ensure governance and guardrails?

Giving AI agents more control can make business processes more streamlined and efficient, but it also introduces risks that need to be managed. That’s where governance comes in: it’s not about limiting what agents can do, but about setting up effective guardrails so they operate safely, ethically, and in line with business goals.

Guardrails help mitigate risks such as bias, errors, and compliance issues, and will ultimately be key to building the organisational trust that agents need to succeed. Organisations should adopt best practices such as involving multidisciplinary teams in defining guardrails, setting clear quality metrics for each area of risk, and taking an iterative approach to developing a guardrail strategy.
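As an illustration of guardrails organised by risk area, with a quality metric attached, here is a minimal Python sketch that checks each action an agent proposes before it is executed. Everything in it is a hypothetical assumption: the `ProposedAction` structure, the allowed-tool list, the payout limit, and the email pattern stand in for rules a multidisciplinary team would define for its own workflow.

```python
import re
from dataclasses import dataclass


@dataclass
class ProposedAction:
    """An action the agent wants to take (hypothetical structure)."""
    tool: str
    payout: float
    message: str


# ASSUMED policy values; real ones would be agreed by the governance team.
ALLOWED_TOOLS = {"lookup_policy", "approve_claim", "send_message"}
PAYOUT_LIMIT = 1000.0  # payouts above this need human approval
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def check_guardrails(action: ProposedAction) -> list[str]:
    """Return one violation message per risk area the action breaches."""
    violations = []
    if action.tool not in ALLOWED_TOOLS:
        violations.append(f"security: tool '{action.tool}' is not permitted")
    if action.payout > PAYOUT_LIMIT:
        violations.append("compliance: payout requires human approval")
    if EMAIL_PATTERN.search(action.message):
        violations.append("privacy: message exposes an email address")
    return violations


def violation_rate(actions: list[ProposedAction]) -> float:
    """Quality metric: share of proposed actions blocked by any guardrail."""
    if not actions:
        return 0.0
    blocked = sum(1 for action in actions if check_guardrails(action))
    return blocked / len(actions)


safe = ProposedAction("approve_claim", 250.0, "Your claim has been approved.")
risky = ProposedAction("delete_records", 5000.0, "Contact jane@example.com")
print(check_guardrails(safe))
print(check_guardrails(risky))
print(violation_rate([safe, risky]))
```

Tracking a metric such as `violation_rate` over time is one way to support the iterative approach described above: thresholds can be tightened or relaxed as evidence accumulates.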

9. Early Adoption Advantage

Businesses that embrace agents early can secure a significant competitive advantage. However, as with the wider excitement around GenAI, there is a sweet spot between open experimentation and pinpointing the use cases that will truly generate value for an organisation.

Start with small, controlled projects where you test the AI agent on specific tasks. These small tests let you see real benefits and validate the technology’s value and feasibility before investing in a full rollout. Then, once validated, gradually add agents to your business processes. This step-by-step approach lets you learn from each phase, make improvements, and only scale up the parts that work well.

10. Focus on Simplicity

It’s better to build something than to wait. Start with simple, modular agents and gradually increase complexity.

In our experience, it helps to break the full process down into clear, manageable steps. This makes it easier to identify which parts of the workflow require an agent and which do not. If a step involves reasoning or handling different types of data (like text and images together), it’s a good candidate for an AI agent.
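The decomposition heuristic above (route a step to an agent if it needs reasoning or mixes data types) can be sketched in a few lines of Python. The `Step` structure and the example claims workflow are hypothetical assumptions chosen for illustration; in practice, deciding whether a step “needs reasoning” is itself a judgement made during process mapping.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step of a decomposed business process (hypothetical structure)."""
    name: str
    needs_reasoning: bool
    input_types: frozenset[str]


def is_agent_candidate(step: Step) -> bool:
    """Heuristic: reasoning or more than one data type suggests an agent."""
    return step.needs_reasoning or len(step.input_types) > 1


# ASSUMED example workflow, loosely based on an insurance claim.
claims_workflow = [
    Step("Receive claim form", False, frozenset({"text"})),
    Step("Extract details from damage photos and notes", False, frozenset({"text", "image"})),
    Step("Decide whether the damage is covered", True, frozenset({"text"})),
    Step("Issue payment", False, frozenset({"structured"})),
]

for step in claims_workflow:
    route = "agent" if is_agent_candidate(step) else "traditional workflow"
    print(f"{step.name}: {route}")
```

Steps that fall to the traditional workflow stay deterministic, which keeps the agentic part of the system small and easier to evaluate.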

Start by building the simplest possible version: one AI model using one tool. Then experiment with improving prompts before adding extra tools or complexity. Since AI frameworks and tools evolve quickly, keeping your system simple and modular will make it easier to adapt as they change.

From the outset, plan for production and scaling, make sure the system is observable and its performance measurable, and balance this with a focus on use cases that will move the value needle.
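One lightweight way to build observability in from the outset is to record every tool call an agent makes. The Python sketch below wraps tool functions in a decorator that logs the tool name, whether the call succeeded, and its latency, and derives a per-tool success rate from that log. The names `observed`, `CALL_LOG`, and `success_rate` are hypothetical, and a real deployment would send these records to a proper monitoring system rather than an in-memory list.

```python
import functools
import time
from typing import Any, Callable

# In-memory call log (ASSUMPTION: stands in for a real metrics backend).
CALL_LOG: list[dict[str, Any]] = []


def observed(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record name, success flag, and latency for every call to a tool."""
    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        ok = False
        try:
            result = func(*args, **kwargs)
            ok = True
            return result
        finally:
            # Runs on success and on failure, so errors are measured too.
            CALL_LOG.append({
                "tool": func.__name__,
                "ok": ok,
                "seconds": time.perf_counter() - start,
            })
    return wrapper


@observed
def lookup_policy(customer_id: str) -> str:
    """Hypothetical tool that fails for unknown customers."""
    if customer_id != "c-001":
        raise KeyError(customer_id)
    return "Home insurance"


def success_rate(tool: str) -> float:
    """Share of recorded calls to `tool` that completed without an error."""
    calls = [c for c in CALL_LOG if c["tool"] == tool]
    return sum(c["ok"] for c in calls) / len(calls) if calls else 0.0


lookup_policy("c-001")
try:
    lookup_policy("c-999")
except KeyError:
    pass
print(success_rate("lookup_policy"))
```

Because the decorator does not change a tool’s inputs or outputs, it can be added to the “one model, one tool” starting point and kept as the system grows.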