Published October 10, 2023

Written by Praveen Kumar Patidar

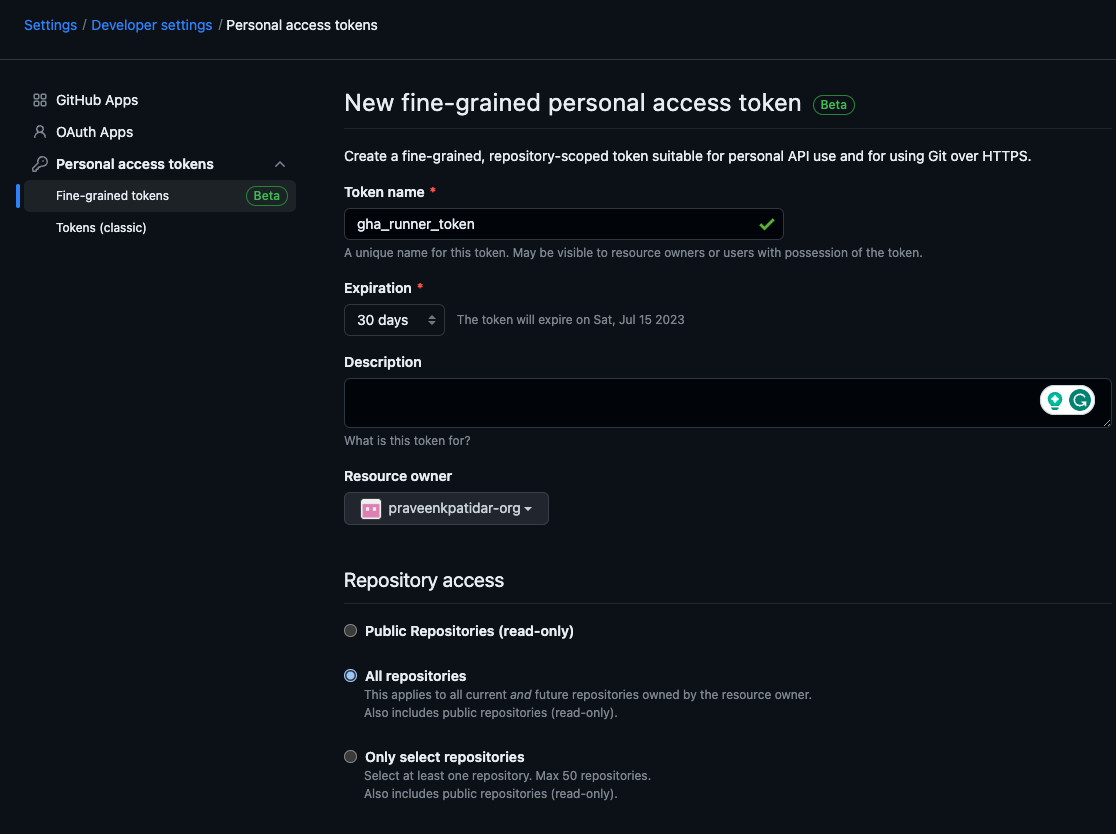

Grant the token the following permissions (if the Resource Owner is an Organisation):

| Repository Permissions | Organisation Permissions |
|---|---|

Copy the token and keep it somewhere secure.

`tf-apps-core/helm_release.tf`: This file organises application deployment using Helm constructs in Terraform. It includes several sample applications and the Karpenter deployment. Cert-Manager, which the GitHub Actions Runner Controller requires, is also defined here.

```hcl
# ...

resource "helm_release" "karpenter" {
  name       = "karpenter"
  repository = "https://charts.karpenter.sh/"
  chart      = "karpenter"
  namespace  = "platform"

  values = [
    file("${path.module}/values/karpenter.yaml")
  ]

  set {
    name  = "clusterName"
    value = local.workspace.cluster_name
  }

  set {
    name  = "clusterEndpoint"
    value = data.aws_eks_cluster.cluster.endpoint
  }

  set {
    name  = "aws.defaultInstanceProfile"
    value = "eks-${local.workspace.cluster_name}-karpenter-instance-profile"
  }

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = "arn:aws:iam::${data.aws_caller_identity.current.account_id}:role/eks-${local.workspace.cluster_name}-karpenter-irsa"
  }

  depends_on = [kubernetes_namespace_v1.platform, helm_release.cert-manager]
}

resource "helm_release" "cert-manager" {
  name       = "cert-manager"
  repository = "https://charts.jetstack.io"
  chart      = "cert-manager"
  namespace  = "platform"
  version    = "1.11.2"

  values = [
    file("${path.module}/values/cert-manager.yaml")
  ]

  depends_on = [kubernetes_namespace_v1.platform, helm_release.alb]
}
```

`tf-apps-core/actions-runner-controller.tf`: This file contains the GitHub Actions Runner Controller application specification. The idea is to create the secret first and then launch the application that consumes it.

Note: In a real-world setup, the secret should be stored somewhere more secure, e.g. AWS Secrets Manager or an AWS SSM Parameter Store SecureString. To reduce complexity, this solution passes it as a sensitive Terraform variable, `github_token`.

```hcl
# ...

resource "helm_release" "actions-runner-controller" {
  name       = "actions-runner-controller"
  repository = "https://actions-runner-controller.github.io/actions-runner-controller"
  chart      = "actions-runner-controller"
  namespace  = "actions-runner-system"

  values = [
    file("${path.module}/values/actions-runner-controller.yaml")
  ]

  depends_on = [
    kubernetes_namespace_v1.actions-runner-system,
    helm_release.karpenter,
    helm_release.cert-manager,
  ]
}
```
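Terraform works out the creation order of these releases from its dependency graph, and the explicit `depends_on` lists above are part of that graph. The sketch below reproduces only that part with Python's standard `graphlib`. It groups resources into "waves" whose dependencies are already met; Terraform may create resources within a wave in parallel. It is an illustration, not Terraform's own algorithm. `helm_release.alb` and the namespace resources live in the elided parts of the files, and Terraform's real graph also includes implicit references.

```python
from graphlib import TopologicalSorter

# Explicit depends_on edges from helm_release.tf and actions-runner-controller.tf:
# each resource maps to the set of resources it must wait for.
DEPENDS_ON = {
    "helm_release.cert-manager": {
        "kubernetes_namespace_v1.platform",
        "helm_release.alb",
    },
    "helm_release.karpenter": {
        "kubernetes_namespace_v1.platform",
        "helm_release.cert-manager",
    },
    "helm_release.actions-runner-controller": {
        "kubernetes_namespace_v1.actions-runner-system",
        "helm_release.karpenter",
        "helm_release.cert-manager",
    },
}


def creation_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group resources into waves; everything in a wave has its dependencies met."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(creation_waves(DEPENDS_ON), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

Running it yields four waves. The ALB release and the two namespaces come first, followed by cert-manager, then Karpenter, and finally the runner controller.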

Deploy Core Apps

Before deploying the core apps module, you need to supply the Terraform variable `github_token`. Because we are using the 3 Musketeers pattern, Terraform runs inside a container and prompts for the value when you run the deployment command.

Now run the following command to install the applications:

```shell
TERRAFORM_ROOT_MODULE=tf-apps-core TERRAFORM_WORKSPACE=demo make applyAuto
```

```
You're now on a new, empty workspace. Workspaces isolate their state, so if you run "terraform plan" Terraform will not see any existing state for this configuration.
docker-compose run --rm envvars ensure --tags terraform
docker-compose run --rm devops-utils sh -c 'cd tf-apps-core; terraform apply -auto-approve'
var.github_token
  github_token supposed to be in environment variable

  Enter a value:
```

Because the variable is secret, no characters appear when you paste the PAT value here. Just paste it and press Enter. The output should look like the following:

```
helm_release.dashboard: Still creating... [20s elapsed]
helm_release.actions-runner-controller: Still creating... [10s elapsed]
helm_release.dashboard: Creation complete after 24s [id=dashboard]
helm_release.actions-runner-controller: Still creating... [20s elapsed]
helm_release.actions-runner-controller: Still creating... [30s elapsed]
helm_release.actions-runner-controller: Still creating... [40s elapsed]
helm_release.actions-runner-controller: Creation complete after 48s [id=actions-runner-controller]

Apply complete! Resources: 9 added, 0 changed, 0 destroyed.
```

At the end of this step, the core apps, including the GitHub Actions Runner Controller and Karpenter, are installed. The cluster is now ready for Karpenter provisioners and GitHub runners.

Let's test the deployment:

```
> k get pods -A
NAMESPACE               NAME                                                READY   STATUS    RESTARTS   AGE
actions-runner-system   actions-runner-controller-67c7b77884-mvpbm          2/2     Running   0          10m
kube-system             aws-node-mv7l4                                      1/1     Running   0          24h
kube-system             aws-node-t6dhh                                      1/1     Running   0          9h
kube-system             coredns-754bc5455d-d4zgg                            1/1     Running   0          9h
kube-system             coredns-754bc5455d-m4lq7                            1/1     Running   0          21h
kube-system             eks-aws-load-balancer-controller-68979c8b74-559vj   1/1     Running   0          13m
kube-system             eks-aws-load-balancer-controller-68979c8b74-w2dvj   1/1     Running   0          13m
kube-system             kube-proxy-2v7bb                                    1/1     Running   0          24h
kube-system             kube-proxy-8sfqj                                    1/1     Running   0          9h
platform                bitnami-metrics-server-6997d6597c-mrzgr             1/1     Running   0          12m
platform                cert-manager-74dc665dfc-x5b5n                       1/1     Running   0          12m
platform                cert-manager-cainjector-6ddfdc5565-nm8nt            1/1     Running   0          12m
platform                cert-manager-webhook-7ccc87556c-5nbdw               1/1     Running   0          12m
platform                dashboard-kubernetes-dashboard-67c5c7b565-gl4tr     0/1     Pending   0          10m
platform                karpenter-59cc9bb69-tn594                           2/2     Running   0          11m
platform                karpenter-59cc9bb69-wwrw7                           2/2     Running   0          11m
> kubectl get nodes
NAME                                             STATUS   ROLES    AGE   VERSION
ip-10-0-14-205.ap-southeast-2.compute.internal   Ready    <none>   24h   v1.25.9-eks-0a21954
ip-10-0-23-87.ap-southeast-2.compute.internal    Ready    <none>   9h    v1.25.9-eks-0a21954
```

Note: Currently, the dashboard app is Pending because of its nodeSelector configuration. It is waiting for a node launched by Karpenter to become available. Since no Karpenter Provisioner exists yet, the pod will stay Pending.

`tf-apps-gha/values/karpenter/default_provisioner.tftpl`: The template file for the Karpenter Provisioner.

```yaml
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: ${ name }
spec:
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["spot"]
    - key: node.kubernetes.io/instance-type
      operator: In
      values: ["t3.xlarge"]
  kubeletConfiguration:
    clusterDNS: ["172.20.0.10"]
    containerRuntime: containerd
  limits:
    resources:
      cpu: 1000
  providerRef:
    name: ${ provider_ref }
  ttlSecondsAfterEmpty: 60
  ttlSecondsUntilExpired: 907200
  labels:
    creator: karpenter
    dedicated: ${ taint_value }
  taints:
    - key: dedicated
      value: ${ taint_value }
      effect: NoSchedule
```
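The `labels` and `taints` in this template reserve each provisioner's nodes for one kind of workload. A pod lands on such a node only if its `nodeSelector` matches the labels and it tolerates the `NoSchedule` taint. Below is a deliberately simplified model of those two scheduler checks. It uses the `dedicated: github-runner` selector and toleration from the RunnerDeployment template in this post. It ignores resource requests, affinity, and the extra labels Karpenter adds to its nodes, so treat it as an illustration, not the Kubernetes scheduler's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass
class Toleration:
    key: str = ""
    value: str = ""
    effect: str = ""         # empty matches every effect
    operator: str = "Equal"  # "Equal" or "Exists"

    def tolerates(self, taint: Taint) -> bool:
        if self.effect and self.effect != taint.effect:
            return False
        if self.operator == "Exists":
            # An empty key with Exists tolerates every taint.
            return not self.key or self.key == taint.key
        return self.key == taint.key and self.value == taint.value


@dataclass
class Node:
    labels: dict[str, str]
    taints: list[Taint] = field(default_factory=list)


@dataclass
class Pod:
    node_selector: dict[str, str] = field(default_factory=dict)
    tolerations: list[Toleration] = field(default_factory=list)


def fits(pod: Pod, node: Node) -> bool:
    """Simplified check: nodeSelector labels match and hard taints are tolerated."""
    selector_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    # PreferNoSchedule is only a preference, so it never blocks scheduling here.
    hard_taints = [t for t in node.taints if t.effect in ("NoSchedule", "NoExecute")]
    taints_ok = all(any(tol.tolerates(t) for tol in pod.tolerations) for t in hard_taints)
    return selector_ok and taints_ok


def karpenter_node(taint_value: str) -> Node:
    """A node labelled and tainted the way the provisioner template describes."""
    return Node(
        labels={"creator": "karpenter", "dedicated": taint_value},
        taints=[Taint("dedicated", taint_value, "NoSchedule")],
    )


runner_pod = Pod(
    node_selector={"dedicated": "github-runner"},
    tolerations=[Toleration("dedicated", "github-runner", "NoSchedule")],
)
plain_pod = Pod()  # no selector, no tolerations

if __name__ == "__main__":
    print(fits(runner_pod, karpenter_node("github-runner")))  # True
    print(fits(runner_pod, karpenter_node("cluster-core")))   # False: label mismatch
    print(fits(plain_pod, karpenter_node("github-runner")))   # False: taint not tolerated
```

The taint keeps unrelated pods off a dedicated node. The nodeSelector keeps the dedicated pods from drifting onto other nodes. The template needs both for the isolation to hold.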

`tf-apps-gha/karpenter_provisioner.tf`: This file creates two Provisioners, `github-runner` and `cluster-core`, from the template above:

```hcl
# ...

resource "kubernetes_manifest" "gha_provisioner" {
  computed_fields = ["spec.limits.resources", "spec.requirements"]

  manifest = yamldecode(
    templatefile("${path.module}/values/karpenter/default_provisioner.tftpl", {
      name         = "github-runner",
      provider_ref = "default-provider",
      taint_value  = "github-runner"
    })
  )
}

resource "kubernetes_manifest" "cluster_core_provisioner" {
  computed_fields = ["spec.limits.resources", "spec.requirements"]

  manifest = yamldecode(
    templatefile("${path.module}/values/karpenter/default_provisioner.tftpl", {
      name         = "cluster-core",
      provider_ref = "default-provider",
      taint_value  = "cluster-core"
    })
  )
}
```

Karpenter AWSNodeTemplate

Provisioners utilize AWSNodeTemplate resources to manage various properties of their instances, including volumes, AWS Tags, Instance profiles, Networking, and more. It’s possible for multiple provisioners to use the same AWSNodeTemplate, and you can customize the node template to fit your specific workload needs – for instance, by creating nodes specifically for Java workloads or batch jobs.

`tf-apps-gha/values/karpenter/default_nodetemplate.tftpl`: The template file for the AWSNodeTemplate. This demo uses a single AWSNodeTemplate.

```yaml
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: ${ name }
spec:
  blockDeviceMappings:
    - deviceName: /dev/xvda
      ebs:
        volumeSize: 100Gi
        volumeType: gp3
        deleteOnTermination: true
        encrypted: true
  tags:
    karpenter.sh/discovery: ${ cluster_name }
  instanceProfile: ${ instance_profile_name }
  securityGroupSelector:
    # aws-ids: ["${ security_groups }"]
    Name: ${ security_groups_filter }
  subnetSelector:
    Name: ${ subnet_selecter }
```

`tf-apps-gha/karpenter_provisioner.tf`: This file also creates the AWSNodeTemplate from the template above. It is named `default-provider`, which is the `providerRef` both Provisioners point to:

```hcl
# ...

resource "kubernetes_manifest" "gha_nodetemplate" {
  manifest = yamldecode(
    templatefile("${path.module}/values/karpenter/default_nodetemplate.tftpl", {
      name                   = "default-provider",
      cluster_name           = local.workspace.cluster_name,
      subnet_selecter        = "${local.workspace.vpc_name}-private*",
      security_groups        = join(",", data.aws_security_groups.node.ids),
      security_groups_filter = "eks-cluster-sg-${local.workspace.cluster_name}-*",
      instance_profile_name  = "eks-${local.workspace.cluster_name}-karpenter-instance-profile"
    })
  )
}

# ...
```
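The `subnetSelector` and `securityGroupSelector` above choose AWS resources by tag, not by ID. Here both match on the `Name` tag with a trailing `*`. The sketch below approximates that selection with Python's `fnmatch`. The VPC name `lab-demo`, the cluster name `lab-demo-cluster`, and all subnet and security group IDs and names are hypothetical. Karpenter performs the real lookup against the EC2 API, so this only illustrates how the patterns filter resources.

```python
from fnmatch import fnmatchcase


def select_by_tags(resources, selector):
    """Return the IDs of resources whose tags satisfy every selector entry.

    resources: iterable of (resource_id, tags) pairs, tags being a dict.
    selector:  tag key -> glob pattern, e.g. {"Name": "lab-demo-private*"}.
    """
    return [
        resource_id
        for resource_id, tags in resources
        if all(key in tags and fnmatchcase(tags[key], pattern)
               for key, pattern in selector.items())
    ]


# Hypothetical subnets and security groups; IDs and names are made up.
SUBNETS = [
    ("subnet-0a", {"Name": "lab-demo-private-ap-southeast-2a"}),
    ("subnet-0b", {"Name": "lab-demo-private-ap-southeast-2b"}),
    ("subnet-0c", {"Name": "lab-demo-public-ap-southeast-2a"}),
]
SECURITY_GROUPS = [
    ("sg-01", {"Name": "eks-cluster-sg-lab-demo-cluster-1234567890"}),
    ("sg-02", {"Name": "lab-demo-bastion"}),
]

if __name__ == "__main__":
    vpc_name, cluster_name = "lab-demo", "lab-demo-cluster"  # assumed names
    print(select_by_tags(SUBNETS, {"Name": f"{vpc_name}-private*"}))
    print(select_by_tags(SECURITY_GROUPS, {"Name": f"eks-cluster-sg-{cluster_name}-*"}))
```

With these inputs, only the two private subnets and the EKS cluster security group are selected. This is the behaviour the `-private*` and `eks-cluster-sg-...-*` patterns are aiming for.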

`tf-apps-gha/values/github-runners/default-runner.tftpl`: The template file for the RunnerDeployment.

```yaml
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: ${ name }
  namespace: actions-runner-system
spec:
  template:
    spec:
      organization: ${ organization }
      group: ${ group }
      serviceAccountName: ${ service_account_name }
      labels:
        - actions_runner_${ environment }
        - default
        - ${ cluster_name }
      resources:
        requests:
          cpu: 200m
          memory: "500Mi"
      dnsPolicy: ClusterFirst
      nodeSelector:
        dedicated: github-runner
      tolerations:
        - key: dedicated
          value: github-runner
          effect: NoSchedule
```

GitHub HorizontalRunnerAutoscaler

HorizontalRunnerAutoscaler is a custom resource type that ships with the GitHub Actions Runner Controller. It is used to define fine-grained scaling options. More reading av

`tf-apps-gha/values/github-runners/default-runner-hra.tftpl`: The template file for the HorizontalRunnerAutoscaler. Its `scaleTargetRef` points at the RunnerDeployment to scale.

```yaml
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: ${ name }-hra
  namespace: actions-runner-system
spec:
  scaleDownDelaySecondsAfterScaleOut: 600
  scaleTargetRef:
    kind: RunnerDeployment
    name: ${ name }
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: PercentageRunnersBusy
      scaleUpThreshold: '0.75'   # The percentage of busy runners at which the number of desired runners is re-evaluated to scale up
      scaleDownThreshold: '0.3'  # The percentage of busy runners at which the number of desired runners is re-evaluated to scale down
      scaleUpFactor: '1.4'       # The scale-up multiplier applied to the desired count
      scaleDownFactor: '0.7'     # The scale-down multiplier applied to the desired count
```
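To make the PercentageRunnersBusy settings concrete, here is a simplified model of how they could turn into a new replica count. When the busy fraction reaches `scaleUpThreshold`, the count is multiplied by `scaleUpFactor` and rounded up. When the fraction drops below `scaleDownThreshold`, it is multiplied by `scaleDownFactor` and rounded down. The result is then clamped to `minReplicas`/`maxReplicas`. This follows my reading of the actions-runner-controller behaviour. The exact rounding and comparison details, and the effect of `scaleDownDelaySecondsAfterScaleOut`, are assumptions here and not modelled, so treat it as a back-of-the-envelope sketch.

```python
import math


def desired_runners(current: int, busy: int, *,
                    min_replicas: int = 1, max_replicas: int = 10,
                    scale_up_threshold: float = 0.75,
                    scale_down_threshold: float = 0.3,
                    scale_up_factor: float = 1.4,
                    scale_down_factor: float = 0.7) -> int:
    """Approximate the PercentageRunnersBusy rule; defaults mirror the HRA template."""
    if current <= 0:
        # Nothing to measure yet; fall back to the configured floor.
        return min_replicas
    fraction_busy = busy / current
    if fraction_busy >= scale_up_threshold:
        desired = math.ceil(current * scale_up_factor)
    elif fraction_busy < scale_down_threshold:
        desired = math.floor(current * scale_down_factor)
    else:
        desired = current
    # Always stay within minReplicas..maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    for current, busy in [(1, 1), (2, 2), (4, 2), (4, 1), (10, 10)]:
        print(f"{busy}/{current} busy -> {desired_runners(current, busy)} runners")
```

With the template's values, a single runner that picks up a job (100% busy) grows to 2 runners. Four runners with only one busy (25%) shrink to 2, and growth always stops at `maxReplicas: 10`.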

Here is the Terraform file that deploys both the RunnerDeployment and the HorizontalRunnerAutoscaler:

`tf-apps-gha/github-runners.tf`

```hcl
resource "kubernetes_manifest" "default_runner_test" {
  computed_fields = ["spec.limits.resources", "spec.replicas"]

  manifest = yamldecode(
    templatefile("${path.module}/values/github-runners/default-runner.tftpl", {
      name                 = "default-github-runner",
      cluster_name         = local.workspace.cluster_name,
      service_account_name = "actions-runner-controller",
      environment          = terraform.workspace,
      group                = local.gh_default_runner_group,
      organization         = local.gh_organization
    })
  )
}

resource "kubernetes_manifest" "default_runner_test_hra" {
  manifest = yamldecode(
    templatefile("${path.module}/values/github-runners/default-runner-hra.tftpl", {
      name = "default-github-runner",
    })
  )
}
```
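`templatefile` substitutes the `${ ... }` placeholders in the `.tftpl` files with the values passed in the map above. Because `environment = terraform.workspace`, deploying with `TERRAFORM_WORKSPACE=demo` produces the runner label `actions_runner_demo`. The sketch below mimics only simple variable interpolation with a regular expression. It does not support Terraform's `%{ }` directives or expressions. The cluster name `lab-demo-cluster` is an assumption, taken from the labels used in the sample workflow at the end of this post.

```python
import re

# Matches simple interpolations such as "${ name }" or "${name}".
PLACEHOLDER = re.compile(r"\$\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}")


def render(template: str, variables: dict[str, str]) -> str:
    """Replace ${ var } placeholders; a missing variable raises KeyError,
    much as templatefile fails when a referenced variable is not supplied."""
    return PLACEHOLDER.sub(lambda match: variables[match.group(1)], template)


# The labels section of default-runner.tftpl.
LABELS_TEMPLATE = (
    "labels:\n"
    "  - actions_runner_${ environment }\n"
    "  - default\n"
    "  - ${ cluster_name }\n"
)

if __name__ == "__main__":
    # environment = terraform.workspace ("demo"); cluster_name is assumed.
    print(render(LABELS_TEMPLATE, {"environment": "demo",
                                   "cluster_name": "lab-demo-cluster"}))
```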

Deployment

Time to deploy! Run the following command to deploy the Karpenter Provisioners and GitHub runners together:

NOTE: Replace the organisation name in `local.tf` with the name of your organisation.

```shell
TERRAFORM_ROOT_MODULE=tf-apps-gha TERRAFORM_WORKSPACE=demo make applyAuto
```

```
kubernetes_manifest.gha_nodetemplate: Creating...
kubernetes_manifest.cluster_core_provisioner: Creating...
kubernetes_manifest.default_runner_test_hra: Creating...
kubernetes_manifest.gha_provisioner: Creating...
kubernetes_manifest.gha_nodetemplate: Creation complete after 3s
kubernetes_manifest.default_runner_test_hra: Creation complete after 3s
kubernetes_manifest.gha_provisioner: Creation complete after 3s
kubernetes_manifest.cluster_core_provisioner: Creation complete after 3s
kubernetes_manifest.default_runner_test: Creating...
kubernetes_manifest.default_runner_test: Creation complete after 8s

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.
```

Next, delete the dashboard app deployment and wait for one minute. You will notice that the node labelled `cluster-core` has been removed. The dashboard was the only app running on that node, so once it is deleted the node becomes empty. Karpenter then terminates the node after the one-minute `ttlSecondsAfterEmpty` set in the provisioner.

```
> k delete deploy dashboard-kubernetes-dashboard -n platform
deployment.apps "dashboard-kubernetes-dashboard" deleted
> # Wait for a minute
> k get nodes
NAME                                             STATUS   ROLES    AGE    VERSION
ip-10-0-21-160.ap-southeast-2.compute.internal   Ready    <none>   8m8s   v1.25.9-eks-0a21954
ip-10-0-23-87.ap-southeast-2.compute.internal    Ready    <none>   32h    v1.25.9-eks-0a21954
ip-10-0-8-43.ap-southeast-2.compute.internal     Ready    <none>   8h     v1.25.9-eks-0a21954
```
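The one-minute removal comes from `ttlSecondsAfterEmpty: 60` in the provisioner. Separately, `ttlSecondsUntilExpired: 907200` (907200 / 86400 = 10.5 days) caps a node's lifetime regardless of load. Below is a simplified model of those two checks. It assumes we know when the node was created and when its last workload pod left. The timestamps are made up, and Karpenter's actual deprovisioning loop considers more inputs than these two TTLs.

```python
from datetime import datetime, timedelta
from typing import Optional

TTL_AFTER_EMPTY = timedelta(seconds=60)         # ttlSecondsAfterEmpty
TTL_UNTIL_EXPIRED = timedelta(seconds=907_200)  # ttlSecondsUntilExpired = 10.5 days


def deprovision_reason(now: datetime, created_at: datetime,
                       empty_since: Optional[datetime]) -> Optional[str]:
    """Return why a node would be removed under the two TTLs, or None to keep it.

    empty_since is when the last workload pod left the node (None while busy).
    """
    if now - created_at >= TTL_UNTIL_EXPIRED:
        return "expired"
    if empty_since is not None and now - empty_since >= TTL_AFTER_EMPTY:
        return "empty"
    return None


if __name__ == "__main__":
    created = datetime(2023, 10, 10, 9, 0, 0)  # hypothetical node launch time
    emptied = created + timedelta(hours=2)     # e.g. the dashboard is deleted
    print(deprovision_reason(emptied + timedelta(seconds=30), created, emptied))
    print(deprovision_reason(emptied + timedelta(seconds=60), created, emptied))
    print(deprovision_reason(created + TTL_UNTIL_EXPIRED, created, None))
```

The three calls return `None`, `"empty"` and `"expired"`. The node survives the first 59 seconds of emptiness, goes away at the one-minute mark, and a busy node is still recycled once it reaches 10.5 days.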

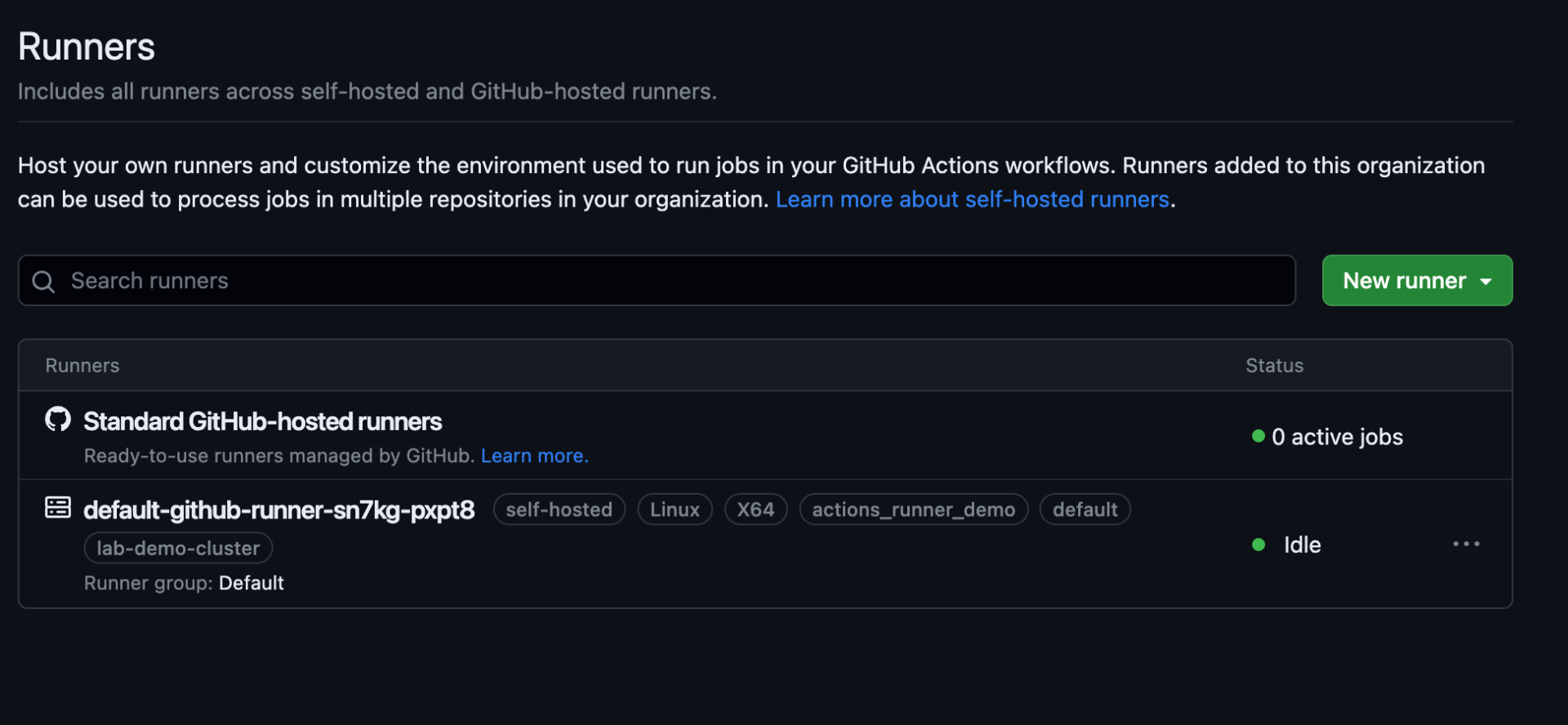

GitHub Runners

To test the runners, first check that the default GitHub runner pod is running in the `actions-runner-system` namespace. You should see the `actions-runner-controller` pod alongside the default GitHub runner. Only one runner is running because the HorizontalRunnerAutoscaler sets `minReplicas: 1`.

```
> k get po -n actions-runner-system
NAME                                         READY   STATUS    RESTARTS   AGE
actions-runner-controller-67c7b77884-9mpfg   2/2     Running   0          82m
default-github-runner-sn7kg-pxpt8            2/2     Running   0          5h19m
```

Let's look at the runner in the GitHub console:

Note: The URLs may not be accessible, as access to the organisation is restricted.

Because we registered the runner at the organisation level, in the default runner group, the runners are listed in the organisation settings:

https://github.com/organizations/<ORGANIZATION NAME>/settings/actions/runners

Because the default runner group has access to all repositories in the GitHub organisation, the runner should also be available to each repository. Let's check the test-workflow repo in the organisation:

https://github.com/<ORGANIZATION NAME>/<REPO NAME>/settings/actions/runners

Now you are ready to run a workflow that targets the runner's labels, as shown in the runner settings, together with `self-hosted`. For example:

```yaml
name: sample-workflow

on:
  push:
    branches: [ "main" ]
  pull_request:
    branches: [ "main" ]
  workflow_dispatch:

jobs:
  build:
    runs-on: [ self-hosted, actions_runner_demo, lab-demo-cluster ]
    steps:
      - uses: actions/checkout@v3
      - name: Run a one-line script
        run: echo Hello, world!
```
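GitHub sends a job to a self-hosted runner only if that runner has every label listed in `runs-on`. GitHub documents runner labels as case-insensitive. The model below checks that rule against the labels from the RunnerDeployment template rendered for the `demo` workspace. It assumes the runner also keeps GitHub's default self-hosted labels (`self-hosted`, `Linux`, `X64`), which is why `self-hosted` matches even though the template does not list it.

```python
def runner_matches(runs_on: list[str], runner_labels: list[str]) -> bool:
    """True if the runner has every label in runs-on (compared case-insensitively)."""
    wanted = {label.lower() for label in runs_on}
    available = {label.lower() for label in runner_labels}
    return wanted <= available


# Template labels for the "demo" workspace plus assumed default labels.
DEMO_RUNNER_LABELS = ["self-hosted", "Linux", "X64",
                      "actions_runner_demo", "default", "lab-demo-cluster"]

if __name__ == "__main__":
    print(runner_matches(["self-hosted", "actions_runner_demo", "lab-demo-cluster"],
                         DEMO_RUNNER_LABELS))
    print(runner_matches(["self-hosted", "actions_runner_prod"], DEMO_RUNNER_LABELS))
```

The first job would be picked up; the second would queue until a runner with an `actions_runner_prod` label registers. This is how the per-workspace label keeps jobs pinned to one environment's runners.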