Published February 9, 2020

Written by Sam Kosky

Background

A leading travel and tourism business has transformed its customer experience, addressed pressing regulatory requirements and positioned itself to gain a data-driven competitive edge through a major data modernisation program. Replacing a struggling AWS solution with a richly featured Microsoft Azure foundation, the business took an agile approach, working with Mantel Group to make the leap in just a matter of weeks.

Challenges with the old solution

- Data lineage – Documentation was inconsistent and scattered at best, which created major problems, above all from a regulatory perspective. A global business must comply with Europe’s General Data Protection Regulation (GDPR) as well as other regulations around the world, and this is difficult without understanding and monitoring how data is moved and transformed.

- Data quality – Data coming into the organisation was neither understood nor checked for quality. It was modified or manipulated without traceability before being stored, and then modified again after storage. When analysts used this information they could not be certain any of it was correct, and when errors were found in reporting they were fixed at the report level – again without traceability.

- No single source of truth – With no single trusted place to go for information, the business had to rely on anecdotal evidence rather than business-validated data to inform decisions.

- Slow data pipelines – Because data was manipulated by multiple areas of the business, the time from data collection to insight was far too long.

- Expensive and difficult to maintain – All business logic lived in stored procedures, and there was no way to tell whether logic was duplicated. Employees regularly referred to their BI environment as a ‘data swamp’ that people had to wade through to find what they were looking for.

The Solution

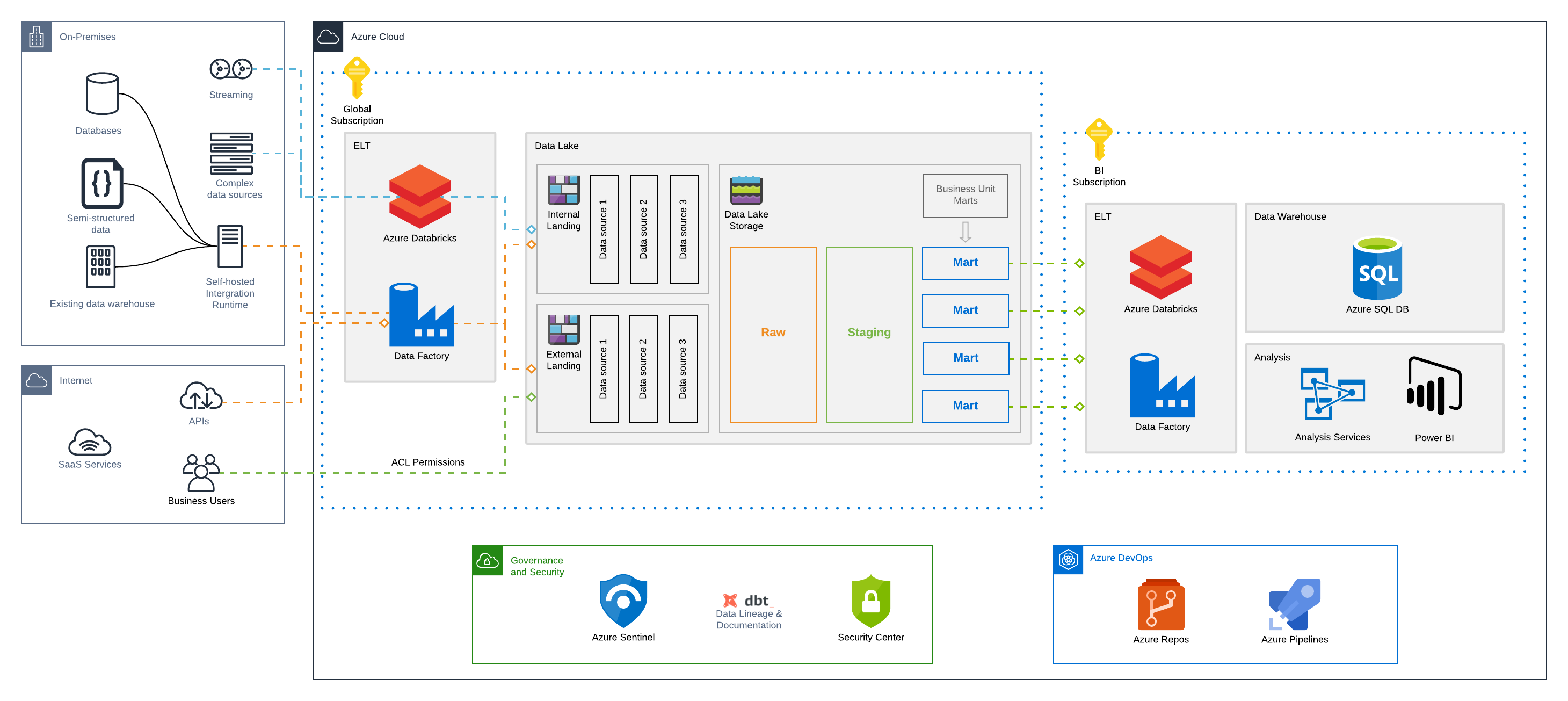

The new Azure platform solution architecture is show below.

The Outcomes

Scalable Architecture

Azure managed services such as Databricks, Data Factory, Data Lake, Azure SQL and Power BI have allowed the business to scale this solution globally, and fast. The Data Lake can be spread across multiple regions to comply with GDPR, and new business units, along with their data, can be onboarded in a matter of days using Infrastructure as Code and existing data pipeline templates.
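The template-driven onboarding described above can be illustrated with a minimal Python sketch. It assumes a hypothetical `PipelineTemplate` and a hypothetical mapping from a business unit's geography to an Azure region; the case study does not describe the project's actual templates, parameters or deployment tooling, so none of these names should be read as its real configuration.

```python
from dataclasses import dataclass

# Hypothetical mapping from a business unit's home geography to the Azure
# region that should hold its data, so EU data stays in an EU region.
REGION_FOR_GEOGRAPHY = {
    "EU": "westeurope",
    "UK": "uksouth",
    "AU": "australiaeast",
    "US": "eastus",
}


@dataclass(frozen=True)
class PipelineTemplate:
    """A reusable ingestion pipeline definition (all fields are illustrative)."""
    name: str
    schedule: str
    landing_container: str


def onboard_business_unit(unit: str, geography: str, sources: list,
                          template: PipelineTemplate) -> list:
    """Expand one template into a pipeline config per source for a new unit."""
    if geography not in REGION_FOR_GEOGRAPHY:
        raise ValueError(f"No data-residency region configured for {geography!r}")
    region = REGION_FOR_GEOGRAPHY[geography]
    return [
        {
            "pipeline": f"{template.name}-{unit}-{source}",
            "region": region,
            "schedule": template.schedule,
            "landing_path": f"{template.landing_container}/{unit}/{source}",
        }
        for source in sources
    ]


template = PipelineTemplate(name="ingest", schedule="daily", landing_container="raw")
configs = onboard_business_unit("cruises", "EU", ["bookings", "customers"], template)
for cfg in configs:
    print(cfg["pipeline"], cfg["region"], cfg["landing_path"])
```

Keeping the region choice in one lookup table means a new unit inherits the data-residency rule automatically, rather than each pipeline picking its own region.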

Data Governance

Microsoft Azure supports a truly global data lake, with governance assured through Azure's global identity and access management platform. The ability to run a multi-geography data lake and visualisation layer was key to delivering a global, resilient, GDPR-compliant solution.

dbt (data build tool) has allowed the data team to share all data documentation across business units and to track the lineage of data from every source through their data pipelines.
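dbt derives its lineage graph from the `ref()` and `source()` calls in each model. The Python sketch below is not dbt's implementation; over a hypothetical set of models and sources, it shows how such a dependency graph answers the two lineage questions that matter most for GDPR: which sources feed a given model, and which models are affected by a given source.

```python
# Hypothetical project: each model lists the nodes it reads from, as a dbt
# model would declare them with ref() (models) or source() (raw tables).
DEPENDENCIES = {
    "stg_bookings": ["source:crm.bookings"],
    "stg_customers": ["source:crm.customers"],
    "stg_payments": ["source:finance.payments"],
    "int_customer_bookings": ["stg_bookings", "stg_customers"],
    "fct_revenue": ["int_customer_bookings", "stg_payments"],
}


def upstream(node, deps):
    """Every node that `node` reads from, directly or transitively."""
    seen = set()
    stack = list(deps.get(node, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(deps.get(parent, []))
    return seen


def downstream(node, deps):
    """Every model that would be affected if `node` changed."""
    return {model for model in deps if node in upstream(model, deps)}


sources = sorted(n for n in upstream("fct_revenue", DEPENDENCIES) if n.startswith("source:"))
print(sources)  # ['source:crm.bookings', 'source:crm.customers', 'source:finance.payments']
print(sorted(downstream("source:crm.customers", DEPENDENCIES)))
# ['fct_revenue', 'int_customer_bookings', 'stg_customers']
```

The downstream query is the one that helps with a GDPR request: it lists every model that holds data derived from a given source.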

Data Accuracy & Integrity

The majority of source flat files are ingested through Azure Databricks, where Python notebooks reject or quarantine malformed data and archive valid data. When invalid data is found, Azure Data Factory triggers Logic Apps that notify the business analysts (BAs) responsible for that data source. This has ensured that broken data pipelines are repaired promptly.
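The reject-or-quarantine step can be sketched in plain Python. This is an illustrative sketch, not the project's notebook code: it assumes a hypothetical CSV schema and a hypothetical `SOURCE_OWNERS` mapping, uses the standard `csv` module rather than Spark, and only builds the notification payload that an alerting step such as a Logic App might send. Writing valid rows to the archive and bad rows to quarantine storage is left out.

```python
import csv
import io
from datetime import date
from typing import Optional

# Hypothetical expected schema for one source feed: column name -> parser.
SCHEMA = {
    "booking_id": int,
    "customer_id": int,
    "travel_date": date.fromisoformat,
    "amount": float,
}

# Hypothetical owner of each source, i.e. who is told about rejected rows.
SOURCE_OWNERS = {"bookings": "ba-bookings@example.com"}


def split_rows(source, text):
    """Parse a CSV feed and return (valid_rows, quarantined_rows)."""
    valid, quarantined = [], []
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames is None or set(reader.fieldnames) != set(SCHEMA):
        # Unexpected header: quarantine the whole file rather than guess columns.
        return [], [{"source": source, "line": 1, "error": "unexpected header"}]
    for row in reader:
        if None in row:
            # DictReader stores surplus fields under the key None.
            error = "too many fields"
        else:
            try:
                valid.append({col: parse(row[col]) for col, parse in SCHEMA.items()})
                continue
            except (ValueError, TypeError) as exc:
                # Missing fields arrive as None and raise TypeError when parsed.
                error = str(exc)
        quarantined.append({"source": source, "line": reader.line_num, "error": error})
    return valid, quarantined


def notification_for(source, quarantined) -> Optional[dict]:
    """Build the message an alerting step (e.g. a Logic App) might send."""
    if not quarantined:
        return None
    return {
        "to": SOURCE_OWNERS.get(source, "data-team@example.com"),
        "subject": f"{len(quarantined)} row(s) quarantined from {source}",
        "details": quarantined,
    }


feed = (
    "booking_id,customer_id,travel_date,amount\n"
    "1,42,2020-03-01,199.50\n"
    "2,43,not-a-date,120.00\n"
)
valid, bad = split_rows("bookings", feed)
print(len(valid), len(bad))  # 1 1
print(notification_for("bookings", bad)["subject"])  # 1 row(s) quarantined from bookings
```

Quarantining row by row keeps good data flowing while the owner of the source is told exactly which lines failed and why.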

dbt was used to orchestrate all transformations against the Data Lake and Data Warehouse. This has allowed the data team to run data tests against incoming raw data and each stage of transformed data before it is loaded into the Data Warehouse, so the metrics and KPIs shown in Power BI dashboards are built only on data that has passed those tests.
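dbt ships generic tests such as `unique`, `not_null`, `accepted_values` and `relationships`, each compiled to a SQL query that selects failing rows; a test passes when that query returns no rows. The Python sketch below mimics that convention over in-memory rows to show how such tests can gate a load. The table contents and the gating step are hypothetical, and this is not how dbt itself executes tests.

```python
from collections import Counter

# Hypothetical rows standing in for a transformed bookings table and its parent.
bookings = [
    {"booking_id": 1, "status": "confirmed", "customer_id": 42},
    {"booking_id": 2, "status": "cancelled", "customer_id": 43},
    {"booking_id": 2, "status": "pending", "customer_id": None},
]
customers = [{"customer_id": 42}, {"customer_id": 43}]


# Each check returns the failing rows, mirroring how a dbt test query selects failures.
def not_null(rows, column):
    return [r for r in rows if r[column] is None]


def unique(rows, column):
    counts = Counter(r[column] for r in rows)
    return [r for r in rows if counts[r[column]] > 1]


def accepted_values(rows, column, values):
    return [r for r in rows if r[column] not in values]


def relationships(rows, column, parent_rows, parent_column):
    keys = {p[parent_column] for p in parent_rows}
    # Null keys are ignored here; catching them is the not_null check's job.
    return [r for r in rows if r[column] is not None and r[column] not in keys]


checks = {
    "not_null(customer_id)": not_null(bookings, "customer_id"),
    "unique(booking_id)": unique(bookings, "booking_id"),
    "accepted_values(status)": accepted_values(bookings, "status", {"confirmed", "cancelled"}),
    "relationships(customer_id)": relationships(bookings, "customer_id", customers, "customer_id"),
}
failures = {name: len(rows) for name, rows in checks.items()}
for name, count in failures.items():
    print(f"{'PASS' if count == 0 else 'FAIL'} {name}: {count} failing row(s)")

# Gate the warehouse load: only proceed when every test returned zero failures.
load_allowed = all(count == 0 for count in failures.values())
print("load to warehouse:", load_allowed)  # load to warehouse: False
```

Because each check returns the offending rows rather than a bare pass/fail flag, the same output can be used both to block the load and to show analysts what needs fixing.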

Empowered BI Team Members

The new Azure platform combined with Azure DevOps has empowered BI staff to adopt new technologies and techniques, which has fuelled a more proactive approach to their Business Intelligence strategy, as opposed to simply reacting to data pipeline maintenance issues.

Fast Releases to Prod

With the addition of Azure DevOps components such as Azure Repos and Pipelines, the data team was able to speed up their release cycle to production. Utilising Infrastructure as Code has allowed for consistent and predictable behaviour between environments.